A new deep-learning framework developed at the Department of Energy’s Oak Ridge National Laboratory is helping to speed up the process of inspecting 3D printed metal parts using X-ray computed tomography (CT). It is also cutting the time, labor, maintenance, and energy costs of inspection.

“The scan speed reduces costs significantly,” said ORNL lead researcher Amir Ziabari. “And the quality is higher, so the post-processing analysis becomes much simpler.”

At the Department of Energy’s Manufacturing Demonstration Facility (MDF), the framework is already being incorporated into the software that commercial partner ZEISS runs on its CT machines. Companies are using these ZEISS machines to hone their 3D-printing methods.

Taking the next step

ORNL researchers had previously developed technology that can analyze the quality of a part while it is being printed. Imaging the part after printing is the next step toward ensuring a quality outcome.

“With this, we can inspect every single part coming out of 3D-printing machines,” said Pradeep Bhattad, ZEISS business development manager for additive manufacturing. “Currently CT is limited to prototyping. But this one tool can propel additive manufacturing toward industrialization.”

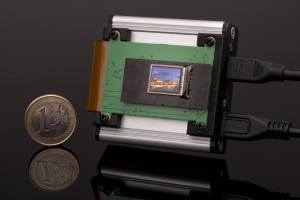

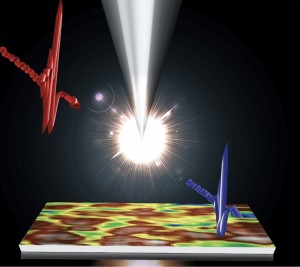

X-ray CT scanning can certify the soundness of a 3D-printed part without damaging it. It works much like a medical CT scan, which uses X-rays to make detailed cross-sectional images of the body, except that the object being scanned sits inside a cabinet. The scanner takes many more pictures than a standard X-ray, from many angles, and a computer combines them into cross sections of the part. The advantage of CT over a single X-ray image is that these cross sections build up a 3D image showing the size, shape, and density of the part, making it useful for detecting defects, analyzing failures, and certifying that a product matches its intended composition and quality.
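The step where a computer combines many projections into a cross section can be illustrated with a small simulation. The sketch below is a minimal Python/NumPy example under simplifying assumptions: it models a parallel X-ray beam through a single 2D slice (industrial scanners typically use a cone beam and filtered or iterative reconstruction), records one projection per angle, and then recombines the projections with unfiltered backprojection. The phantom, the 64 × 64 size, the 90 view angles, and all function names are hypothetical choices for illustration and do not reflect ZEISS or ORNL software.

```python
import numpy as np


def detector_bins(n: int, theta: float, n_det: int) -> np.ndarray:
    """Detector bin hit by the ray through each pixel centre at view angle theta."""
    c = (n - 1) / 2.0
    rows, cols = np.mgrid[0:n, 0:n]
    # Signed distance of each pixel from the rotation axis, measured along the detector.
    s = (cols - c) * np.cos(theta) + (rows - c) * np.sin(theta)
    return np.clip(np.round(s + (n_det - 1) / 2.0).astype(int), 0, n_det - 1)


def project(image: np.ndarray, thetas: np.ndarray, n_det: int) -> np.ndarray:
    """Simulate parallel-beam projections: total density along each ray, per view."""
    n = image.shape[0]
    sinogram = np.zeros((len(thetas), n_det))
    for i, theta in enumerate(thetas):
        bins = detector_bins(n, theta, n_det)
        sinogram[i] = np.bincount(bins.ravel(), weights=image.ravel(), minlength=n_det)
    return sinogram


def backproject(sinogram: np.ndarray, thetas: np.ndarray, n: int) -> np.ndarray:
    """Unfiltered backprojection: smear each view back along its rays and average."""
    n_det = sinogram.shape[1]
    recon = np.zeros((n, n))
    for view, theta in zip(sinogram, thetas):
        recon += view[detector_bins(n, theta, n_det)]
    return recon / len(thetas)


# Hypothetical test object: a 64 x 64 slice containing one small dense feature.
n = 64
phantom = np.zeros((n, n))
phantom[20:27, 36:43] = 1.0
thetas = np.linspace(0.0, np.pi, 90, endpoint=False)  # 90 views over 180 degrees
n_det = int(np.ceil(n * np.sqrt(2))) + 1             # wide enough for the diagonal
sinogram = project(phantom, thetas, n_det)
recon = backproject(sinogram, thetas, n)
```

Because no ramp filter is applied, the recovered slice is blurred, but the dense feature is still the brightest region. Filtered backprojection sharpens the result by filtering each projection before smearing it back.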

X-ray CT has not been used at large scale in additive manufacturing because current methods of scanning and analysis are time-intensive and imprecise. Images can be inaccurate because metals absorb the lower-energy X-rays in the beam more strongly than the higher-energy ones, an effect known as beam hardening, and cracks or pores may go unseen. A trained technician can correct for these problems, but the process is time- and labor-intensive.
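Why metals absorbing the lower-energy X-rays distorts the image can be shown with the Beer-Lambert law applied separately to each energy in the beam. Dense metal removes low-energy photons first, so the beam that gets through a thick section is "harder" and is attenuated less per millimeter. A reconstruction that assumes a single-energy beam therefore underestimates the density of thick sections. The minimal sketch below assumes a hypothetical two-energy spectrum; the photon fractions and attenuation coefficients are illustrative numbers, not measured values for any alloy.

```python
import math

# Hypothetical two-energy beam: (fraction of photons, attenuation coefficient in 1/mm).
# These numbers are illustrative only, not measured values for any alloy.
SPECTRUM = [
    (0.5, 2.0),  # lower-energy photons: strongly absorbed by dense metal
    (0.5, 0.3),  # higher-energy photons: penetrate more easily
]


def transmitted_fraction(thickness_mm: float) -> float:
    """Fraction of photons that pass through the part (Beer-Lambert law, per energy)."""
    return sum(w * math.exp(-mu * thickness_mm) for w, mu in SPECTRUM)


def effective_mu(thickness_mm: float) -> float:
    """Attenuation per mm that a single-energy reconstruction would infer."""
    return -math.log(transmitted_fraction(thickness_mm)) / thickness_mm


for t in (0.5, 1.0, 2.0, 5.0, 10.0):
    print(f"{t:5.1f} mm of metal -> apparent attenuation {effective_mu(t):.3f} per mm")
```

The apparent attenuation falls steadily as thickness grows even though the material is the same throughout, which is why uncorrected scans of dense parts can show cupping and streak artifacts.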

Generative adversarial network

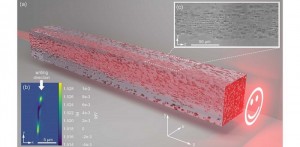

Ziabari and his team developed a deep-learning framework based on a generative adversarial network, or GAN. In this framework, the GAN is used to synthetically create a realistic-looking data set for training a neural network, leveraging physics-based simulations and computer-aided design (CAD) models. A GAN pits two neural networks against each other as in a game: one generates synthetic data, and the other tries to distinguish it from real data. According to Ziabari, GANs have rarely been used for practical applications like this one until now.
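The "game" between competing networks has a standard mathematical form. In the original GAN formulation (Goodfellow et al., 2014), a generator $G$ turns random noise $z$ into synthetic samples, and a discriminator $D$ outputs the probability that its input came from the real data rather than from $G$. The two networks are trained toward opposite goals on a single objective. The article does not say which loss ORNL's framework uses, so this is the textbook form, not necessarily Ziabari's:

$$
\min_{G}\,\max_{D}\; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
$$

For a fixed generator, the best discriminator is $D^{*}(x) = p_{\text{data}}(x) / \big(p_{\text{data}}(x) + p_{g}(x)\big)$, where $p_g$ is the distribution of generated samples. Training drives $p_g$ toward $p_{\text{data}}$, at which point $D^{*}(x) = 1/2$ everywhere and the discriminator can no longer tell synthetic data from real.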

The framework developed by Ziabari’s team would allow manufacturers to rapidly fine-tune their builds, even while changing designs or materials. With this approach, sample analysis can be completed in a day instead of six to eight weeks, Bhattad said.

“If I can very rapidly inspect the whole part in a very cost-effective way, then we have 100% confidence,” he said. “We are partnering with ORNL to make CT an accessible and reliable industry inspection tool.”

ORNL researchers evaluated the framework’s performance on hundreds of samples printed with different scan parameters, using complicated, dense materials. The framework performed well on these samples, and ongoing trials at MDF aim to verify that the technique is equally effective with any type of metal alloy, Bhattad said.

Training a supervised deep-learning network for CT usually requires many expensive measurements. Because metal parts pose additional challenges, getting the appropriate training data can be difficult. Ziabari’s approach provides a leap forward by generating realistic training data without requiring extensive experiments to gather it. Ziabari will present the process his team developed during the Institute of Electrical and Electronics Engineers International Conference on Image Processing in October.

Written by Anne Fischer, Editorial Director, Novus Light Technologies Today
