Over the past two decades, many manufacturers have sought to ease the software development burden faced by vision systems integrators. Much of this effort has gone into software tools that reduce the time and effort developers need to bring their systems to market.

Today, certain integrated development environments (IDEs) allow systems integrators to design applications graphically instead of writing traditional program code. As well as specifying the application logic graphically, these environments let users design a graphical operator interface for the application directly.

To complement such development environments, suppliers also provide comprehensive toolkits containing many software functions for developing machine vision applications. These toolkits include standard functions for image capture, processing, analysis, annotation, display and archiving, relieving software developers of the burden of writing custom code for them.

Deep learning

More recently, the benefits of using deep learning to address the complexities involved in inspecting intricate parts have become evident. Hence, many vendors of integrated development environments are now racing to find the best way to incorporate convolutional neural networks (CNNs) into their environments as functional elements. Their aim is to make such complex software as straightforward to use as simpler functions such as edge detection or pattern matching.

One of many such vendors aiming to do just that is Matrox Imaging. At the Vision Show in Stuttgart last year, representatives from the company were on hand to discuss a demonstration system that they had built to inspect containers of dental floss using a deep-learning-based classification tool available in the newest version of Matrox Imaging’s flowchart-based software, Matrox Design Assistant X (see above).

Dental floss containers were chosen specifically to highlight the challenges faced by systems integrators building vision systems to inspect similar assemblies. The system was developed to detect the presence and condition of the filament of waxed dental floss that runs across the top of the package. This was a challenge because the floss (which is held between two small metal supports) has the same color or intensity as the body of the container. Not only did the demonstration show how effectively a CNN could solve this problem, it also highlighted the advantages of other tools within the Design Assistant vision software.

According to Arnaud Lina, the Director of Research and Innovation at Matrox Imaging, deep learning was chosen over conventional image processing because conventional software makes pass or fail decisions from only a handful of basic features, combined in a relatively straightforward way by clearly defined rules. Deep-learning-based software, by contrast, can work with a complex combination of features in an image and can cope with both the variability of those features and the complicated relationships between them. A deep-learning-based system can therefore be more adaptive, accommodating natural or acceptable variations in the process.
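To make the contrast concrete, the sketch below shows the kind of rule-based check Lina describes: a single hand-picked feature (the grey-level contrast between a floss region and a background region) compared against a fixed threshold. The function names, regions, toy images and the 30-grey-level threshold are illustrative assumptions, not Matrox code. Because the floss has much the same intensity as the container, the rule gives the same verdict whether the floss is present or missing.

```python
from statistics import mean


def region_mean(image, rows, cols):
    """Mean grey level of the rectangular region given by row and column ranges."""
    return mean(image[r][c] for r in rows for c in cols)


def rule_based_check(image, floss_roi, background_roi, min_contrast=30):
    """Hypothetical rule: pass only if the floss region differs from the
    background region by at least min_contrast grey levels."""
    contrast = abs(region_mean(image, *floss_roi) - region_mean(image, *background_roi))
    return contrast >= min_contrast


# 4 x 4 toy images: row 1 is where the floss should be, row 3 is background.
FLOSS_ROI = (range(1, 2), range(0, 4))
BACKGROUND_ROI = (range(3, 4), range(0, 4))

floss_present = [[120] * 4, [122] * 4, [120] * 4, [120] * 4]  # floss nearly matches the body
floss_missing = [[120] * 4 for _ in range(4)]
dark_floss = [[120] * 4, [40] * 4, [120] * 4, [120] * 4]      # a high-contrast case

print(rule_based_check(floss_present, FLOSS_ROI, BACKGROUND_ROI))  # False
print(rule_based_check(floss_missing, FLOSS_ROI, BACKGROUND_ROI))  # False
print(rule_based_check(dark_floss, FLOSS_ROI, BACKGROUND_ROI))     # True
```

The rule works when its one feature is discriminative (the dark-floss case) but cannot separate the first two images, which is the situation where a classifier that learns many subtler features becomes attractive.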

System software

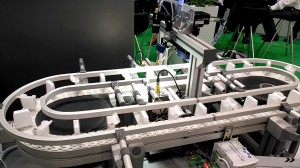

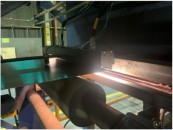

On the hardware front, the demonstration system comprised a small conveyor that presented the containers of dental floss to an inspection station. As each container approached the inspection station, it triggered a Banner World-Beam QS-18 photoelectric sensor. The trigger signal, which was sent to a Matrox 4Sight GPm vision controller, indicated that a container was present within the field of view of a Power-over-Ethernet FLIR Blackfly S 1.6-megapixel camera mounted vertically above the inspection station. On receiving the signal from the sensor, the industrial computer triggered the camera to capture an image of the top of the floss container whilst the container was illuminated by a Smart Vision Lights LM45 mini linear light.
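The trigger logic amounts to capturing exactly one image per container. A minimal sketch, assuming the sensor output is available as a sequence of sampled on/off levels (in the real system this is handled by the 4Sight GPm's digital I/O, whose API is not shown here), fires a hypothetical `capture` callback on each rising edge, so a container sitting in the beam is imaged only once.

```python
def capture_on_rising_edge(samples, capture):
    """Call capture() once per low-to-high transition of a sampled sensor signal.

    samples: iterable of truthy/falsy sensor levels (hypothetical polling loop).
    capture: callback that would trigger the camera (hypothetical).
    Returns the number of captures triggered.
    """
    previous = False
    count = 0
    for level in samples:
        if level and not previous:  # beam has just been broken by a container
            capture()
            count += 1
        previous = bool(level)
    return count


captured = []
n = capture_on_rising_edge([0, 1, 1, 1, 0, 0, 1, 1, 0], lambda: captured.append("frame"))
print(n)  # 2: two containers passed the sensor
```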

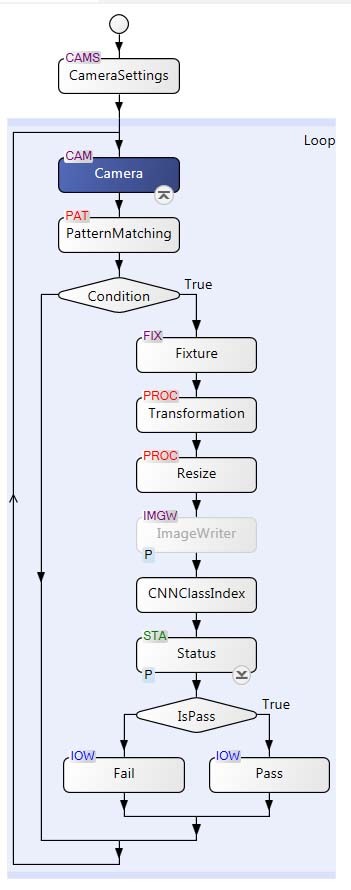

As the camera captured the images, they were transferred over a GigE interface to the 4Sight GPm industrial computer for processing. To improve system performance, image acquisition ran concurrently with, and transparently to, image processing. Vision tools built in the Matrox Design Assistant X flowchart-based software environment then determined the condition of the dental floss between the small metal supports on either side of the floss containers. To reduce the computational burden placed on the CNN, a series of software operations was first performed on the image (Figure 2).
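Running acquisition concurrently with processing is essentially a producer/consumer pipeline. The sketch below is a generic illustration, not how Matrox Design Assistant implements it: a background thread stands in for the camera and pushes frames into a bounded queue while the main thread processes them, so a new image can be acquired while the previous one is still being processed. `run_pipeline`, `frames` and `process` are hypothetical names.

```python
import queue
import threading


def run_pipeline(frames, process, depth=4):
    """Acquire frames on a background thread while the caller's thread processes them.

    frames: iterable standing in for the camera (hypothetical).
    process: function applied to each frame; results are returned in acquisition order.
    depth: size of the buffer between acquisition and processing (arbitrary choice).
    """
    buffer = queue.Queue(maxsize=depth)
    sentinel = object()  # marks the end of the image stream

    def acquire():
        for frame in frames:
            buffer.put(frame)  # blocks if processing falls too far behind
        buffer.put(sentinel)

    producer = threading.Thread(target=acquire)
    producer.start()
    results = []
    while True:
        frame = buffer.get()
        if frame is sentinel:
            break
        results.append(process(frame))
    producer.join()
    return results


print(run_pipeline(range(5), lambda frame: frame * 10))  # [0, 10, 20, 30, 40]
```

The bounded queue keeps acquisition from running arbitrarily far ahead of processing; with a single consumer reading a FIFO queue, results come back in the order the frames were acquired.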

Figure 2: Software built in the Matrox Design Assistant X flowchart-based software environment determined the existence and condition of the dental floss present between an opening and a small metal support on either side of the floss containers. To reduce the computational burden placed on the CNN, a series of software operations was first performed on the image.

The first of these was a conventional pattern-matching operation that determined whether the camera had actually captured an image of a container, and hence whether the container itself was present. Matrox Design Assistant includes two steps for performing pattern recognition: Pattern Matching and Model Finder. The Pattern Matching step finds a pattern by looking for a similar spatial distribution of intensity, while the Model Finder step locates an object using geometric features (e.g., contours).
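Searching for "a similar spatial distribution of intensity" is commonly done with normalized cross-correlation. The sketch below uses that technique as a stand-in; it is not Matrox's algorithm, and it does not cover geometric matching of the Model Finder kind. It slides a template over the image, scores every position, and reports the container as absent (`None`) if no score reaches a threshold. The 0.8 threshold and the toy images are assumptions.

```python
from math import sqrt


def ncc(image, template, top, left):
    """Normalized cross-correlation between the template and the image window at (top, left)."""
    h, w = len(template), len(template[0])
    win = [image[top + r][left + c] for r in range(h) for c in range(w)]
    tpl = [v for row in template for v in row]
    n = len(tpl)
    mw, mt = sum(win) / n, sum(tpl) / n
    num = sum((a - mw) * (b - mt) for a, b in zip(win, tpl))
    den = sqrt(sum((a - mw) ** 2 for a in win) * sum((b - mt) ** 2 for b in tpl))
    return num / den if den else 0.0  # flat windows carry no pattern information


def find_pattern(image, template, min_score=0.8):
    """Exhaustive search; returns (score, top, left) of the best match, or None if too weak."""
    h, w = len(template), len(template[0])
    best = max(
        (ncc(image, template, r, c), r, c)
        for r in range(len(image) - h + 1)
        for c in range(len(image[0]) - w + 1)
    )
    return best if best[0] >= min_score else None


template = [[0, 9], [9, 0]]
scene = [[0] * 5 for _ in range(5)]
scene[2][2], scene[3][1] = 9, 9  # embed the pattern with its top-left corner at (2, 1)
empty = [[0] * 5 for _ in range(5)]

print(find_pattern(scene, template))  # (1.0, 2, 1): container present
print(find_pattern(empty, template))  # None: no container in view
```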

If the container was present in the image, a virtual fixture was then created. This step attached a set of local co-ordinates to the object in the image, establishing a relative co-ordinate system that could move with respect to the global co-ordinate system of the image. The other parts of the image could then be referenced to this fixture in subsequent processing steps. The next steps, the transformation and resizing operations, were then performed relative to the fixture before the image was passed to the CNN.
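A fixture is, in effect, a rigid transform from local to global co-ordinates. Assuming the pattern-matching step reports the container's position and rotation (the `origin` and `angle_deg` parameters below are hypothetical, as is the region), a region defined once in fixture co-ordinates can be mapped into the image wherever the container appears:

```python
from math import cos, radians, sin


def fixture_to_image(point, origin, angle_deg):
    """Map (x, y) from fixture (local) co-ordinates to image (global) co-ordinates.

    origin: image position of the fixture, e.g. where the container was found.
    angle_deg: rotation of the container relative to the image axes.
    """
    x, y = point
    a = radians(angle_deg)
    return (origin[0] + x * cos(a) - y * sin(a),
            origin[1] + x * sin(a) + y * cos(a))


# Hypothetical floss inspection region, defined once relative to the fixture.
FLOSS_REGION = [(-40, -10), (40, -10), (40, 10), (-40, 10)]

# Container found at (320, 240) and rotated by 90 degrees in this image.
corners = [fixture_to_image(p, (320, 240), 90) for p in FLOSS_REGION]
print([(round(x), round(y)) for x, y in corners])
# [(330, 200), (330, 280), (310, 280), (310, 200)]
```

Because the region is stored in fixture co-ordinates, the same definition follows the container as its position and orientation change from image to image.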

Many types of CNN could be used to process such images, but a basic image-classification CNN requires its input images to be of a fixed size. By transforming and resizing the images with tools in the Matrox Design Assistant toolbox before presenting them to the CNN for classification, the developers of the system could ensure that this requirement was met. This approach also eases the computational workload on the industrial computer, both during the inspection process and during training, when the CNN is presented with good and bad images and taught to tell them apart.
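Whatever the size of the region extracted through the fixture, the classifier needs a fixed input. A minimal sketch of that step, using nearest-neighbour resampling (a simple stand-in; the resampling method Matrox uses is not stated) and an assumed 64 x 64 input size:

```python
CNN_INPUT_SIZE = (64, 64)  # assumed (height, width) expected by the classifier


def resize_nearest(image, out_h, out_w):
    """Resample a 2-D grey-level image (list of rows) to out_h x out_w by nearest neighbour."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def prepare_for_cnn(region):
    """Bring any extracted region to the fixed size the CNN expects."""
    return resize_nearest(region, *CNN_INPUT_SIZE)


print(resize_nearest([[1, 2], [3, 4]], 4, 4))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]

patch = prepare_for_cnn([[0] * 150 for _ in range(90)])  # a 90 x 150 region
print(len(patch), len(patch[0]))  # 64 64
```

A small, fixed input also caps the number of pixels the CNN must process per image, in training as well as in inspection.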

Reducing complexity

According to Pierantonio Boriero, the Director of Product Management at Matrox, the more images that are used to train such a CNN tool, the more accurate the system is likely to be. However, he believes that although system developers may want to add such a tool to their vision armory, they may be dissuaded from doing so by the sheer number of images that might need to be acquired and presented to the CNN to train it.

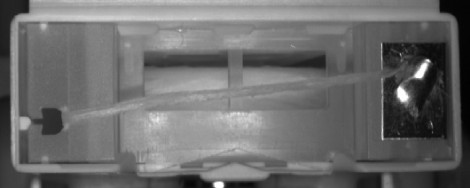

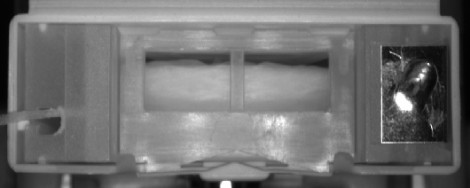

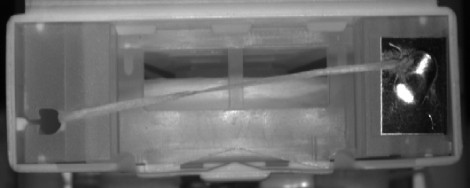

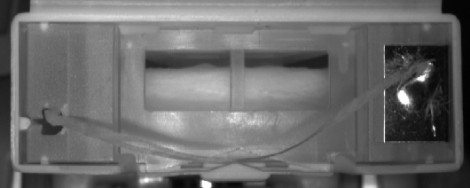

In the demonstration system that inspected containers for the presence of dental floss, the Matrox team achieved their goal of distinguishing good containers from bad with an accuracy of 98 per cent by training their custom CNN tool with just 1,000 images of both acceptable and unacceptable products. The images were physically captured under varying conditions of illumination, camera positioning, lens focus, and dental floss placement. Once trained, the CNN classified each container, and the Matrox Design Assistant software flagged the product as having passed or failed the inspection (Figure 3).

(a) Correctly placed

(b) Missing

(c) Twisted

(d) Misplaced

Figure 3: Using a CNN, the Matrox demonstration system was able to distinguish between containers with correctly placed floss (a), missing floss (b), correctly placed but twisted floss (c) and misplaced floss (d).

Had the demonstration system been a real production system, products failing the inspection would have been ejected from the conveyor.
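The final decision step can be sketched as follows, assuming the classifier returns one score per class shown in Figure 3. Only "correctly placed" passes. The score format, the 0.5 confidence threshold and the `eject` callback are hypothetical, not part of the Matrox system as described.

```python
CLASSES = ("correctly placed", "missing", "twisted", "misplaced")  # classes from Figure 3


def inspect(scores, min_confidence=0.5):
    """Return (label, passed) from hypothetical per-class CNN scores."""
    best = max(range(len(CLASSES)), key=lambda i: scores[i])
    label = CLASSES[best]
    # A low-confidence "correctly placed" prediction is treated as a fail.
    passed = label == "correctly placed" and scores[best] >= min_confidence
    return label, passed


def handle_container(scores, eject):
    """Flag the container and call the (hypothetical) ejector if it fails."""
    label, passed = inspect(scores)
    if not passed:
        eject(label)
    return passed


rejected = []
print(handle_container([0.90, 0.05, 0.03, 0.02], rejected.append))  # True
print(handle_container([0.10, 0.20, 0.60, 0.10], rejected.append))  # False
print(rejected)  # ['twisted']
```

Rejecting uncertain "good" predictions is a conservative design choice; whether it suits a given line depends on the relative cost of false rejects and escapes.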

Lina says that simplifying the creation, or more accurately the adaptation, of CNNs so that users unfamiliar with the complexities of deep learning software can employ them effectively is one of the ultimate goals. He says that the Matrox development team is working on enabling users to do so in a way that will be less complicated than many existing solutions.

Written by Dave Wilson, Senior Editor, Novus Light Technologies Today

Image at top: A small conveyor presents containers of dental floss to an inspection station. A camera then captures an image of the top of the floss container, which is transferred to an industrial computer for processing. A CNN classifier within the Matrox Design Assistant X flowchart-based software environment then determines the existence and condition of the dental floss held in place on either side of the floss container.
