Industrial machine vision systems employ cameras fitted with specialized lenses to acquire images of products passing along a production line. These images are processed by software running on a PC where objective measurements are made to determine whether the product meets the quality control requirements demanded by the manufacturer.

Today, the software that extracts the relevant features from an image of a product, so that its integrity can be quantified, is either developed by the machine builder or, more likely, supplied to the OEM by a third party in the form of standard algorithms. The machine builder can then combine these third-party building blocks into an automated workflow in a standard development environment to perform the functions the application requires.

To date, the effectiveness of any vision system has relied on the ability of the system developer to select the algorithms that most efficiently extract enough relevant data from the images to enable the different dimensions, features, or qualities of a product to be measured and compared against tolerances predetermined by the manufacturer.

A new breed of software

However, in the future, a new breed of deep learning-based industrial image analysis software may provide a viable alternative to the traditional software techniques employed for inspection and classification.

If its proponents are to be believed, such deep learning software will be able to perform many, if not all, of the same inspection functions as more traditional approaches, from identifying complex features and objects to classifying them. In addition, it will enable machine builders to dramatically reduce the time spent developing their systems and so speed up their time-to-market.

According to Girish Venkataramani, a development manager at MathWorks, deep learning is implemented using neural network architectures. The term “deep” refers to the number of layers in the network: the more layers, the deeper the network. Traditional neural networks contain only three types of layers: input, hidden and output. Modern deep networks, on the other hand, have many additional feature detection layers that perform functions such as convolution, activation and pooling.
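To make the three feature detection operations concrete, the following minimal Python sketch applies a convolution, an activation (ReLU) and a pooling step to a toy image. It is an illustration only, not MathWorks code: the 5x5 image and the hand-made edge kernel are assumptions, whereas a real network learns its kernel values during training.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Activation: replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; drops any incomplete border window."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Toy 5x5 image (assumption): two dark columns (0) followed by three bright ones (1).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical hand-made kernel that responds to dark-to-bright vertical edges.
edge_kernel = [[-1, 1], [-1, 1]]

features = max_pool(relu(convolve2d(image, edge_kernel)))
print(features)
```

The pooled output is non-zero only in the column that contains the edge, which is the kind of local edge pattern an early layer might respond to.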

Training a network

While the first hidden layers might learn only the local edge patterns in an image, each subsequent layer might learn progressively more complex features. Hence, the network increases the complexity and detail of what it is learning from layer to layer. The last layer uses all these generated features to classify the image into a particular class.

“A deep learning system can be taught to identify different features in images through training. This is achieved by presenting the network with a set of labeled data from which the network can then categorize the images according to the features contained within them,” said Mr. Venkataramani.

One method used to build a deep learning system is to train a network from scratch.

While the approach may produce the most accurate results, it generally requires hundreds of thousands of images and considerable computational resources.

Using a pre-trained network

An alternative is to take a pre-trained network such as AlexNet and use it as a starting point to learn a new image classification task using a smaller number of images. Fine-tuning a network with transfer learning in such a manner is usually faster and simpler than training a network with randomly initialized weights from scratch.

AlexNet is a convolutional neural network that has been trained on more than a million images and can classify images into 1000 object categories. To fine-tune such a network using a smaller number of images, the new image data is first divided into two sets: one is used to train the network and the other to validate it. If the new images differ in size from those originally used to train the network, they must first be resized or cropped. If they are already the same size, this stage of the retraining process is unnecessary.
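The two preparation steps described here, splitting the data and cropping images to a fixed size, can be sketched in plain Python. This is a minimal illustration under stated assumptions: the file names, the 70/30 split and the toy image are hypothetical, and a real pipeline would use an imaging library to load and resize actual pictures.

```python
import random


def split_dataset(items, train_fraction=0.7, seed=0):
    """Shuffle a copy of items and split it into (training, validation)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def center_crop(image, target_h, target_w):
    """Crop a row-major image (list of rows) to the target size about its centre."""
    h, w = len(image), len(image[0])
    if h < target_h or w < target_w:
        raise ValueError("image is smaller than the target; resize it instead")
    top = (h - target_h) // 2
    left = (w - target_w) // 2
    return [row[left:left + target_w] for row in image[top:top + target_h]]


# Hypothetical file names standing in for a small labeled image set.
image_files = [f"part_{i:03d}.png" for i in range(10)]
train_files, validation_files = split_dataset(image_files)

# Toy 4x6 "image" whose pixel values encode their own row and column.
toy_image = [[10 * r + c for c in range(6)] for r in range(4)]
cropped = center_crop(toy_image, 2, 2)
print(len(train_files), len(validation_files), cropped)
```

Shuffling before the split keeps both sets representative when the files are stored in class order.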

In the transfer learning process, the last few layers of an existing reference network such as AlexNet are modified to recognize a specific number of new image classes. The network is then fine-tuned by making small adjustments to the weights of the last few layers of the network such that the feature representations learned for the original task are adjusted to suit the new task. After this, the network is retrained on the new dataset.

Such a pre-trained convolutional neural network model can be loaded into MATLAB using MathWorks’ Neural Network Toolbox with just a single line of code. The toolbox provides access to several pre-trained networks, including the popular AlexNet.

According to Mr. Venkataramani, once the pre-trained AlexNet neural network has been loaded, the architecture of the network can then be tailored to meet the needs of the new application. Because AlexNet was originally trained to classify images into 1000 different categories, its final three layers produce an output array of 1000 probabilities, each indicating the likelihood that an image belongs to the corresponding category.
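An array of class probabilities of this kind is typically produced by a softmax function applied to the raw scores of the last fully connected layer. The sketch below shows that calculation for three classes rather than 1000; the score values are made up for illustration.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for three classes.
scores = [2.0, 1.0, 0.1]
probabilities = softmax(scores)

# The predicted class is the one with the highest probability.
predicted_class = probabilities.index(max(probabilities))
print(probabilities, predicted_class)
```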

The final layers must therefore be altered for the new classification problem, perhaps to classify just ten or twenty different classes of images.
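Conceptually, altering the final layers means keeping the transferred feature layers and swapping in a new classification head sized for the new problem. The sketch below shows the idea on a plain Python list; the layer list is an abbreviated stand-in for illustration, not the real AlexNet definition or any toolbox object, and the `_new` layer names are hypothetical.

```python
def replace_classifier(layers, num_classes):
    """Return a new layer list with the last three layers swapped out.

    `layers` is a list of (name, description) tuples used purely for
    illustration; it is not a real framework object.
    """
    transferred = layers[:-3]  # keep the learned feature layers unchanged
    new_head = [
        ("fc_new", f"fully connected, {num_classes} outputs"),
        ("softmax_new", "softmax"),
        ("output_new", f"classification, {num_classes} classes"),
    ]
    return transferred + new_head


# Abbreviated, hypothetical description of a pre-trained network.
pretrained = [
    ("conv1", "convolution"),
    ("relu1", "activation"),
    ("pool1", "max pooling"),
    ("fc7", "fully connected, 4096 outputs"),
    ("fc8", "fully connected, 1000 outputs"),
    ("prob", "softmax"),
    ("output", "classification, 1000 classes"),
]

adapted = replace_classifier(pretrained, num_classes=10)
for name, description in adapted:
    print(name, "-", description)
```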

Using the Neural Network Toolbox, software developers can also specify several training options, such as the rate at which the network learns to identify a new class of image. The learn rate on the replacement fully connected layer, for example, can be set higher than on the transferred layers, with the result that learning on the new final layers is faster.
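The effect of a higher learn rate on the final layers can be seen in a single gradient descent update. The following sketch is a generic illustration, not the toolbox's implementation: the layer names, the base rate of 0.001 and the factor of 20 are all assumptions chosen to make the difference visible.

```python
def sgd_step(weights, gradients, base_rate, rate_factors):
    """One plain SGD update with a per-layer learning-rate multiplier."""
    updated = {}
    for layer, layer_weights in weights.items():
        # Layers without an explicit factor use the base rate unchanged.
        rate = base_rate * rate_factors.get(layer, 1.0)
        updated[layer] = [
            w - rate * g for w, g in zip(layer_weights, gradients[layer])
        ]
    return updated


# Hypothetical two-layer example with identical weights and gradients.
weights = {"conv1": [1.0, 1.0], "fc_new": [1.0, 1.0]}
gradients = {"conv1": [1.0, 1.0], "fc_new": [1.0, 1.0]}

# Assumed settings: the new layer learns 20 times faster than the rest.
updated = sgd_step(weights, gradients, base_rate=0.001,
                   rate_factors={"fc_new": 20.0})
print(updated)
```

With the same gradient, the transferred layer moves by 0.001 while the new layer moves by 0.02, so the pre-trained features change only slightly as the new head adapts quickly.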

Three options: CPU, GPU or cloud-based

Currently, a developer can train the network in one of three ways: on a CPU-based system, on a GPU-based system or in the cloud. By default, MathWorks’ network training software uses a GPU if one is available. Otherwise, it uses a single CPU.

“To enable software developers to take advantage of the inherent parallelism of the GPU, MathWorks recently introduced GPU Coder, a software product that automatically analyzes, identifies, and partitions segments of MATLAB code to run on either the CPU or GPU with the result that network training and classification can be accelerated. The fact that GPU Coder produces highly optimized and efficient CUDA code that runs natively on the GPU can speed up both training and classification,” said Mr. Venkataramani.

Once the network has been trained, the classification accuracy, or the proportion of correctly classified instances in the test data, can be calculated by presenting the network with the test data. Sample test images can then be displayed with their predicted labels.
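The accuracy calculation itself is simple, as the following minimal Python sketch shows. The class labels are hypothetical examples of the kind an inspection system might use.

```python
def classification_accuracy(predicted, actual):
    """Proportion of test instances whose predicted label matches the true one."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not actual:
        raise ValueError("cannot compute accuracy on an empty test set")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Hypothetical labels for four test images.
actual_labels = ["good", "good", "scratch", "dent"]
predicted_labels = ["good", "scratch", "scratch", "dent"]

accuracy = classification_accuracy(predicted_labels, actual_labels)
print(f"Accuracy: {accuracy:.0%}")
```

Here three of the four predictions match the true labels, giving an accuracy of 75%.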

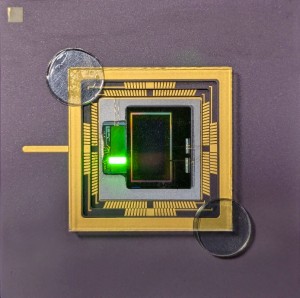

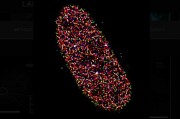

To illustrate the effectiveness of the transfer learning approach, engineers at MathWorks recently demonstrated how it could be used to build an application that could be adapted to identify the location and curvature of lane boundaries of visible lanes on a road. This was achieved by loading the AlexNet model into MATLAB, modifying it to meet the needs of the new application and then training the network on an NVIDIA Titan X (Pascal) GPU using a small set of labeled training data.

Once trained, the network was then able to accurately detect lane boundaries in a new series of images that were presented to it, despite the fact that only a limited number of samples were originally used to train it.

Written by Dave Wilson, Senior Editor, Novus Light Technologies Today

Top photo: Engineers at MathWorks recently built a system that could identify the location and curvature of lane boundaries of visible lanes on a road. This was achieved by loading the AlexNet model into the MATLAB environment, modifying it to meet the needs of the new application and then training the network on an NVIDIA Titan X (Pascal) GPU using a small set of labeled training data.
