The touchscreen has fundamentally changed how we interact with handheld devices and other electronic systems by letting us navigate menus with gestures. Next-generation user interfaces, however, are taking gesture recognition to the next level: above the screen and even out into our living rooms through 3D imaging.

One of the best-known large-scale applications of gesture recognition is the Microsoft Kinect, which let users play games with full-body gestures. Since then, many other applications have begun to tap into the capabilities of 3D imaging to enable more advanced functionality and improve ease of use. For example, 3D gesture sensing will eliminate the need for multiple remote controls to operate a home entertainment center. For mobile devices, 3D imaging will augment camera capabilities, enabling greater precision in object recognition and depth sensing.

Depth sensing

Depth sensing technology is the foundation for gesture recognition above a touchscreen or out into a room. Depth can be computed by analyzing different views of a scene captured by cameras, obtained either through multiple cameras or through lens manipulation, with or without active illumination.

Multiple cameras

Images from different camera angles can be used to build a depth map using parallax techniques. However, adding cameras is undesirable: it significantly increases hardware cost, and the cameras must be placed far enough apart to analyze a room, which increases device size. In addition, this approach relies on object edges and has difficulty filling in the flat regions within those edges.
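The parallax relationship behind multi-camera depth, and why the cameras must sit far apart for room-scale sensing, can be sketched with the textbook stereo formula Z = f·B/d. This is a minimal sketch that assumes an idealized pair of rectified pinhole cameras with parallel optical axes; the focal length, baseline, and disparity values below are hypothetical, and the helper names are illustrative only.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point seen by two rectified cameras: Z = f * B / d.

    focal_px     -- focal length expressed in pixels (hypothetical value in the example)
    baseline_m   -- distance between the two camera centers, in meters
    disparity_px -- horizontal shift of the point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point at finite depth")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float = 1.0) -> float:
    """Approximate depth uncertainty for a given disparity error: dZ ~= Z**2 / (f * B) * dd.

    The error grows with the square of distance and shrinks as the baseline widens,
    which is why room-scale stereo needs widely spaced cameras.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    # Hypothetical handset-like setup: 600 px focal length, 6 cm baseline.
    z = depth_from_disparity(600, 0.06, 12)
    print(f"depth: {z:.2f} m")
    # Compare a 6 cm and a 30 cm baseline for a point 3 m away, assuming 1 px matching error.
    for baseline in (0.06, 0.30):
        print(f"baseline {baseline:.2f} m -> depth error ~{depth_error(600, baseline, 3.0):.2f} m")
```

Under these assumptions, widening the baseline fivefold cuts the depth error at 3 m fivefold, which is the hardware-size trade-off described above.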

Lens manipulation

A single camera can capture different views by adjusting its lens. However, this introduces latency limited by the lens's mechanical response, and it requires more CPU capacity to handle the complexity of comparing multiple high-resolution images.

Active illumination

Active illumination enhances camera images by illuminating the field of view with patterned light. This enables a system to distinguish flat from non-flat surfaces by evaluating how the projected light changes shape as the light patterns change. Using light outside the visible spectrum keeps the flashing invisible, so it does not degrade the user experience.

The challenge for designers has been that traditional active illumination techniques are hampered by ambient light (e.g., sunlight) and can provide depth information only as far out as the light source's power allows. In addition, they cannot provide accurate depth information in regions with insufficient variation in contrast or color.

Continuing innovation in lighting technology now enables developers to implement active illumination in ways that overcome the shortcomings of traditional approaches. Three types of light sources are currently being used to develop low-cost, reliable depth detection for 3D imaging and cameras:

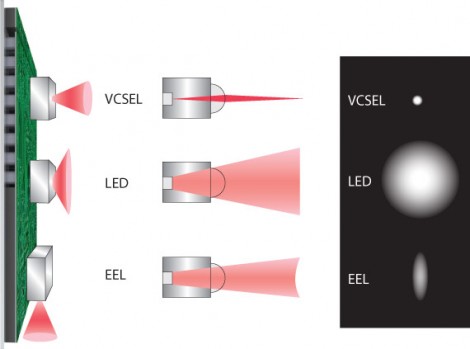

- Light Emitting Diode (LED): While LEDs are a cost-effective technology, they emit light over a 180-degree angle, so they must output more power to overcome losses. In addition, LEDs cannot be modulated quickly. This limits depth resolution and, because each flash must last longer, increases overall power consumption.

- Distributed Feedback Laser (DFB): DFBs are a type of edge-emitting laser (EEL) being considered for gesture recognition. However, DFBs tend to have a fixed output power, so scaling power requires mounting multiple DFBs, which increases cost and device size. Additionally, output power scales in large steps, making it difficult to match output power optimally to an application's requirements.

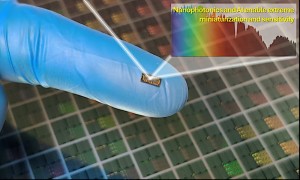

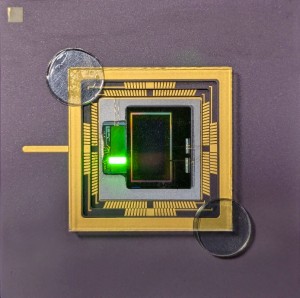

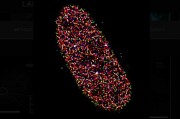

- Vertical-Cavity Surface-Emitting Laser (VCSEL): VCSELs are top-side emitting devices that can be mounted as dies on printed circuit boards. They can also be easily integrated with other optical components and simple optics to create a complete laser, driver, and controller in a single package. Their directional emission, combined with wavelength stability over temperature, maximizes their output power efficiency. In addition, multiple VCSELs can be integrated together, allowing output power to be scaled in fine increments and providing light source redundancy that increases reliability.

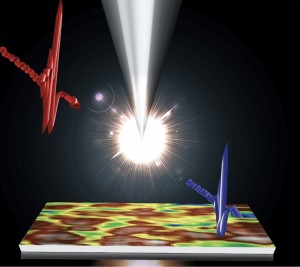

Figure 1: VCSEL, LED, and EEL light sources can be used to create the patterned light required for active illumination to sense the depth of objects both nearby and across a room. (Image from http://www.myvcsel.com/designing-with-vcsels/).
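The contrast between coarse DFB power steps and fine-grained VCSEL scaling comes down to simple arithmetic. The sketch below assumes, hypothetically, that optical output adds linearly across identical emitters and ignores coupling and optical losses; the unit powers (500 mW per DFB device, 10 mW per VCSEL emitter) and the 615 mW target are made-up illustrative numbers, not datasheet figures, and `units_needed` and `excess_power_mw` are hypothetical helpers.

```python
def units_needed(required_mw: int, unit_power_mw: int) -> int:
    """Smallest number of identical emitters whose combined output meets the requirement.

    Assumes (hypothetically) that output power adds linearly across emitters.
    """
    if required_mw <= 0 or unit_power_mw <= 0:
        raise ValueError("powers must be positive")
    return -(-required_mw // unit_power_mw)  # integer ceiling division


def excess_power_mw(required_mw: int, unit_power_mw: int) -> int:
    """Power delivered beyond the requirement, i.e. the cost of coarse scaling steps."""
    return units_needed(required_mw, unit_power_mw) * unit_power_mw - required_mw


if __name__ == "__main__":
    target_mw = 615  # hypothetical application requirement
    options = {
        "DFB (one device per step)": 500,         # hypothetical per-device output
        "VCSEL array (one emitter per step)": 10,  # hypothetical per-emitter output
    }
    for name, unit_mw in options.items():
        n = units_needed(target_mw, unit_mw)
        print(f"{name}: {n} units, {excess_power_mw(target_mw, unit_mw)} mW over target")
```

With these illustrative numbers, the DFB option must overshoot by a whole device's worth of power, while the finer VCSEL step lands within one emitter of the target.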

In many respects, gesture recognition and 3D imaging are still in their infancy, but they promise to once again change how we interact with our devices. Although 3D imaging is in the early stages of development, it is being built on a firm foundation of proven technology. VCSELs, for example, have been used in communications applications for over 15 years and deployed in more than 500 million devices.

Now is the time for companies to establish leadership in 3D imaging technology. When touchscreen-based gesture recognition was first introduced to handsets, devices using the technology came to dominate the market. OEMs who embrace 3D imaging today will position themselves at the top of the next wave.

By Pritha Khurana, Product Line Manager, Finisar
