Lidar is quickly becoming an enabling technology for the autonomous vehicle market. In fact, many believe lidar is the most important optical technology in the race toward mainstream self-driving cars. But not all self-driving vehicles are fully autonomous, and lidar is the technology that differentiates vehicles that are fully autonomous from those that are not. Many modern vehicles include radar for blind-spot detection and cameras for increased visibility, but the SAE International standard classifies such vehicles at most as Level 2, which is not fully autonomous. Highly and fully autonomous vehicles, at Levels 4 and 5, require multiple sensors to see the road, including radar, cameras, and lidar. Lidar provides more precise spatial measurements than radar and cameras, and it can create detailed 3D images of both moving and stationary objects. Because lidar is so critical to making self-driving cars a mainstream reality, nearly every major player in the autonomous vehicle market is incorporating lidar into its vehicles.

Solid-state lidar solves industry challenges

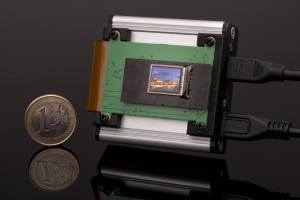

To date, one of the main challenges of incorporating lidar into self-driving cars has been that lidar systems required mechanical mirrors that rotated through a full 360 degrees. These spinning lidar systems were mounted on the rooftops of the first autonomous vehicles, but they were sensitive to vibration, difficult to miniaturize, and, overall, too expensive. However, recent developments are improving the durability of lidar systems and allowing prices to drop significantly.

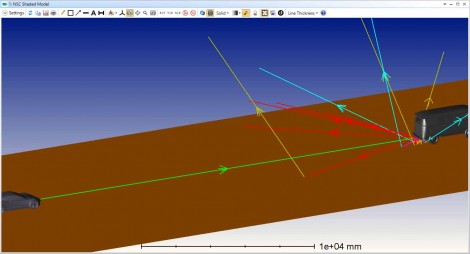

Figure 2. An OpticStudio model showing a lidar light source mounted on the front bumper of a car and reflecting off of a van about 20 m away.

Most lidar innovation in the automotive industry focuses on creating solid-state systems that reduce or eliminate the movement of the mechanism that scans the laser beam across the field of view. Although this typically results in a narrower field of view, the new solid-state lidar systems meet the technical requirements for long-distance scanning. Because they have few or no moving parts, they are also more durable and less expensive than the mechanical scanning systems used previously.

Development of these solid-state lidar systems is helping to drive the anticipated 25% lidar market growth by 2022. Furthermore, that projected growth is spurring heavy competition, which will continue to reduce the cost of integrating solid-state lidar systems into autonomous vehicles.

Fierce rivalry in the industry

At this year’s Consumer Electronics Show (CES), sensor technology company LeddarTech showcased what it calls “the world’s first lidar integrated circuit (IC) to enable high-volume deployment of solid-state 3D lidars”—and the technology was twice named a CES 2018 Innovation Award Honoree. Innoviz announced InnovizOne, an automotive-grade, low-cost lidar coming in 2019, and unveiled InnovizPro, a standalone solid-state, MEMS-based scanning lidar system.

Also at the show, automotive lidar pioneer Velodyne announced Velodyne Velarray, a cost-effective, high-performance, solid-state lidar that enables hidden and low-profile sensing for a range of advanced driver assistance systems (ADAS) and autonomous applications.

And recently, Quanergy opened an automated factory in Sunnyvale, California, to produce the company’s S3 solid-state lidar sensor—which won the 2017 CES Best of Innovation Award. The factory opening makes Quanergy the only company to mass produce solid-state 3D lidar and is a great leap forward toward low-cost, mainstream lidar.

The latest designs for solid-state lidar

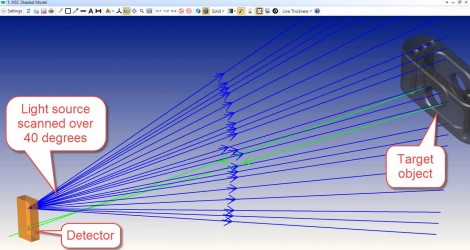

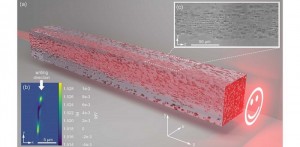

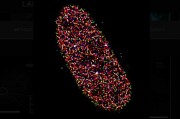

Lidar systems measure the time it takes for a laser pulse, or alternatively a modulated signal, to travel to a target, reflect off it, and return to an optical sensor. The distance to the target is the speed of light multiplied by the one-way time of flight, which is half of the measured round-trip time. As the laser source scans across the field of view, the resulting measurements are assembled into a highly accurate 3D map, often called a point cloud.

Figure 3. An OpticStudio model showing a light source scanned over 40 degrees toward target object. A small fraction of the source rays are reflected off the target object and collected by the detector.
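The time-of-flight relation can be written as d = c·t/2, where t is the measured round-trip time. The minimal sketch below assumes propagation at the vacuum speed of light (ignoring the small refractive index of air); the function names are illustrative, not part of any lidar API.

```python
# Speed of light in vacuum, m/s (the refractive index of air, ~1.0003, is ignored)
C = 299_792_458.0


def tof_distance(round_trip_time_s: float) -> float:
    """Distance to the target from a measured round-trip time of flight.

    The light travels out and back, so the one-way time is half the round trip.
    """
    one_way_time_s = round_trip_time_s / 2.0
    return C * one_way_time_s


def round_trip_time(distance_m: float) -> float:
    """Inverse relation: round-trip time for a target at the given distance."""
    return 2.0 * distance_m / C


if __name__ == "__main__":
    t = round_trip_time(20.0)  # a van about 20 m away, as in Figure 2
    print(f"round trip: {t * 1e9:.1f} ns -> distance: {tof_distance(t):.2f} m")
```

Even a nearby target returns its echo within a few hundred nanoseconds, which is why lidar timing electronics must resolve sub-nanosecond intervals to reach centimeter-level range precision.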

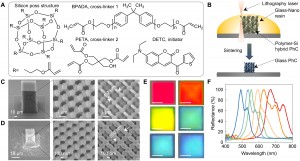

All automotive lidar systems have three main components: a laser light source, scanning or diffusing optics, and a sensor. As the name indicates, solid-state lidar systems do not use moving parts in the scanning or diffusing optics. There are, however, three different methods by which solid-state lidar systems scan or diffuse the laser beam across the field of view.

Instead of scanning a narrow beam across the target scene, flash lidar systems use a single, very bright flash of light to illuminate the entire field of view. This is advantageous because it captures the entire scene at once, much like a camera flash, without any mechanical scanning parts. However, illuminating the whole scene requires a very powerful, diffuse infrared source, and it also requires a very sensitive detector, which can dramatically increase the cost.
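To see why a flash system needs such a powerful source, compare the energy each point in the scene receives. The sketch below assumes a hypothetical 320 × 240-pixel detector, perfectly uniform illumination, and no optical losses. Under those idealizations, one flash must carry as much energy as all the pulses of a scanned frame combined.

```python
def flash_energy_needed_j(energy_per_point_j: float, h_pixels: int, v_pixels: int) -> float:
    """Energy a single flash must carry so that every pixel's patch of the scene
    receives `energy_per_point_j`, the energy a scanned pulse delivers to one point.

    Idealized: uniform illumination over the field of view, no optical losses.
    """
    return energy_per_point_j * h_pixels * v_pixels


if __name__ == "__main__":
    per_point = 1e-6  # hypothetical per-point pulse energy of a scanning system, joules
    total = flash_energy_needed_j(per_point, 320, 240)
    print(f"one flash needs {total * 1e3:.1f} mJ, {320 * 240}x the per-point energy")
```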

A more cost-effective solid-state lidar design uses a phased array to steer a laser beam across the target scene. Phased arrays have been used for decades to steer radio-frequency beams, and the same concept has more recently been applied at optical frequencies using microscale and nanoscale devices on photonic integrated circuits. Like the antenna arrays used in radar systems, the optical phased arrays (OPAs) in solid-state lidar systems use a microscopic array of antennas to steer the beam. The delay line of each optical antenna is tuned so that the antenna emits light with a specific phase, and the resulting interference sets the direction and pattern of the output beam. Quanergy's S3 lidar system, which the company is now mass producing, uses this OPA design.
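The steering principle can be illustrated with the textbook model of a uniform linear array: applying a phase ramp of −k·d·sin θ₀ per element (k = 2π/λ, d = emitter pitch) makes the emitted wavelets add in phase toward angle θ₀. The sketch below assumes hypothetical parameters (64 emitters at half-wavelength pitch, 1550 nm) and ideal point emitters; it is not the design of any particular product.

```python
import cmath
import math


def steering_phases(n_elements, pitch_m, wavelength_m, steer_deg):
    """Phase (radians) applied to each emitter so the beam points at steer_deg.

    A linear ramp of -k * d * sin(theta0) per element cancels the path
    difference between neighboring emitters in the direction theta0.
    """
    k = 2.0 * math.pi / wavelength_m
    step = -k * pitch_m * math.sin(math.radians(steer_deg))
    return [(n * step) % (2.0 * math.pi) for n in range(n_elements)]


def array_factor(phases, pitch_m, wavelength_m, theta_deg):
    """Normalized far-field amplitude (0..1) of the array in direction theta_deg."""
    k = 2.0 * math.pi / wavelength_m
    s = math.sin(math.radians(theta_deg))
    field = sum(cmath.exp(1j * (n * k * pitch_m * s + phi))
                for n, phi in enumerate(phases))
    return abs(field) / len(phases)


def beam_direction(phases, pitch_m, wavelength_m, steps=3601):
    """Brute-force search over -90..+90 degrees for the angle of peak emission."""
    angles = [-90.0 + 180.0 * i / (steps - 1) for i in range(steps)]
    return max(angles, key=lambda a: array_factor(phases, pitch_m, wavelength_m, a))


if __name__ == "__main__":
    wavelength = 1550e-9       # assumed operating wavelength, meters
    pitch = wavelength / 2.0   # half-wavelength pitch keeps grating lobes out of view
    for target in (-20.0, 0.0, 12.0):
        phases = steering_phases(64, pitch, wavelength, target)
        peak = beam_direction(phases, pitch, wavelength)
        print(f"requested {target:+.1f} deg -> peak at {peak:+.2f} deg")
```

Changing only the phase ramp moves the beam, with no moving parts, which is the property that makes OPAs attractive for solid-state lidar.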

Lidar designs with microelectromechanical systems (MEMS) mirrors are often considered solid-state lidar systems as well. Technically, MEMS lidar systems do have moving parts, but their tiny mirrors move far less than the large spinning mirrors used in traditional lidar systems. LeddarTech's lidar systems use this MEMS design.

The intricacies of designing solid-state lidar systems

Optical designers face several challenges when building solid-state lidar systems for autonomous vehicles. For example, solid-state lidar systems have a narrower field of view than the 360-degree coverage of rooftop spinning lidar systems. The best commercially available OPA lidar systems cover about 50 degrees, and MEMS lidar systems are only marginally better at about 60 degrees. Therefore, at least six solid-state lidar systems (360 / 60) are needed to replace one spinning rooftop lidar system, and eight are needed at 50 degrees. So, for solid-state lidar to be commercially viable, the combined price of multiple solid-state lidar systems must be lower than that of a single spinning lidar system.
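The sensor count and the resulting cost target follow from simple arithmetic. In this sketch, `overlap_deg` is a hypothetical margin that adjacent sensors share for stitching their data together; the article's figures assume no overlap.

```python
import math


def sensors_for_full_coverage(fov_deg: float, overlap_deg: float = 0.0) -> int:
    """Minimum number of identical sensors needed to cover 360 degrees horizontally.

    overlap_deg is a hypothetical margin shared by adjacent sensors for stitching.
    """
    effective_deg = fov_deg - overlap_deg
    if effective_deg <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(360.0 / effective_deg)


def break_even_unit_price(spinning_price: float, n_sensors: int) -> float:
    """Highest per-unit price at which n solid-state units cost no more than one spinning unit."""
    return spinning_price / n_sensors


if __name__ == "__main__":
    for name, fov in (("MEMS", 60.0), ("OPA", 50.0)):
        n = sensors_for_full_coverage(fov)
        print(f"{name}: {n} units, each must cost under 1/{n} of a spinning lidar")
```

Any stitching overlap raises the count (a 5-degree overlap on 60-degree units already requires seven), which tightens the per-unit price target further.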

In addition to increasing the field of view, optical designers are working to maximize the range of automotive lidar systems. The maximum range of a lidar system depends on the laser power, the reflectivity of the target object, and the detector sensitivity. At highway speeds, cars typically travel at 60 miles per hour, or about 27 meters per second. Rounding up to 30 m/s for margin, if a car needs about 10 seconds of warning to react to an obstacle, then its lidar systems should be able to accurately measure targets about 30 m/s × 10 s = 300 meters away. Even OPA and MEMS lidar systems, which concentrate light into a scanned beam rather than a flash, require a powerful source to achieve adequate signal-to-noise ratios, especially for low-reflectivity targets such as tires or other matte black objects.
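The range requirement and its dependence on reflectivity can be sketched with a simplified lidar equation for an extended Lambertian target that fills the beam: P_r = P_t·ρ·A·η / (πR²). This model ignores atmospheric loss and incidence angle, and every numeric input in the usage example (peak power, aperture, minimum detectable power) is hypothetical.

```python
import math

MPH_TO_MPS = 0.44704  # exact: 1 mi = 1609.344 m, 1 h = 3600 s


def required_range_m(speed_mph: float, warning_time_s: float) -> float:
    """Distance covered at constant speed during the warning time."""
    return speed_mph * MPH_TO_MPS * warning_time_s


def received_power_w(p_tx_w, reflectivity, aperture_m2, range_m, efficiency=1.0):
    """Simplified lidar equation for a Lambertian target that fills the beam.

    Received power falls as 1/R^2; atmospheric loss and incidence angle are ignored.
    """
    return p_tx_w * reflectivity * aperture_m2 * efficiency / (math.pi * range_m ** 2)


def max_range_m(p_tx_w, reflectivity, aperture_m2, p_min_w, efficiency=1.0):
    """Range at which the received power drops to the detector's minimum p_min_w."""
    return math.sqrt(p_tx_w * reflectivity * aperture_m2 * efficiency / (math.pi * p_min_w))


if __name__ == "__main__":
    print(f"required range at 60 mph with 10 s warning: {required_range_m(60, 10):.0f} m")
    # Hypothetical system: 50 W peak power, 1 cm^2 receiver aperture, 10 nW minimum signal
    for label, rho in (("matte black tire", 0.1), ("bright painted panel", 0.8)):
        print(f"{label:>20}: max range {max_range_m(50.0, rho, 1e-4, 1e-8):.0f} m")
```

Because range scales with the square root of reflectivity, a target that reflects one eighth as much light is detected at only about 35% of the distance, which is why dark targets drive the source-power requirement.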

If the lidar system uses pulses to measure the time of flight, then the energy of each individual pulse can be dynamically increased by decreasing the pulse repetition rate of the laser. However, this only works to a certain extent, because lowering the repetition rate also reduces the number of measurements per second. Assuming the electronics are fast enough, the maximum pulse repetition rate of the laser defines the maximum point density of the data, and thus the spatial resolution of the lidar system. The faster the repetition rate, the denser the data, and the more detailed the spatial information will be.
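The trade-off can be sketched by holding the average optical power fixed. This is a simplification: actual eye-safety limits depend on wavelength, pulse duration, and other parameters. The sketch also shows a second constraint the repetition rate imposes: each echo must return before the next pulse fires, which caps the unambiguous range. The 10 mW power budget and 10 Hz frame rate are hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s


def pulse_energy_j(avg_power_w: float, rep_rate_hz: float) -> float:
    """Energy per pulse when the average optical power is held fixed."""
    return avg_power_w / rep_rate_hz


def max_unambiguous_range_m(rep_rate_hz: float) -> float:
    """Farthest range whose echo returns before the next pulse is fired."""
    return C / (2.0 * rep_rate_hz)


def points_per_frame(rep_rate_hz: float, frame_rate_hz: float) -> float:
    """Measurements available per frame for a single-beam scanner."""
    return rep_rate_hz / frame_rate_hz


if __name__ == "__main__":
    avg_power = 0.01  # hypothetical average-power budget, watts
    for rep in (1e6, 500e3, 250e3):
        print(f"{rep / 1e3:6.0f} kHz: "
              f"{pulse_energy_j(avg_power, rep) * 1e9:5.1f} nJ/pulse, "
              f"{points_per_frame(rep, 10):8.0f} points/frame at 10 Hz, "
              f"unambiguous to {max_unambiguous_range_m(rep):6.1f} m")
```

Halving the repetition rate doubles the pulse energy but halves the point density. At 500 kHz, the unambiguous range is about 300 m, matching the range target discussed earlier.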

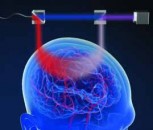

Lidar systems must also take eye safety into account. Most carmakers strive to use Class 1 lasers, which "cannot emit laser radiation at known hazard levels," according to the Occupational Safety and Health Administration (OSHA). Therefore, the detector must be sensitive enough to work with eye-safe laser emissions, even when the target has low reflectivity. Most detectors used for automotive lidar today are one of two types of solid-state photodetector, single-photon avalanche diodes (SPADs) or silicon photomultipliers (SiPMs), because of their improved detection efficiency at longer wavelengths.

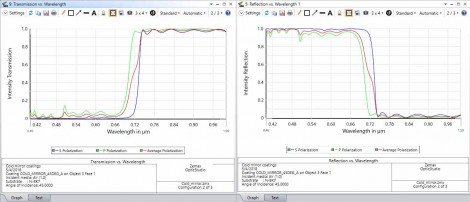

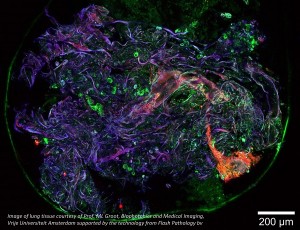

The detectors must also function in bright daylight, with ambient light levels as high as 98,000 lux (lumens per square meter). To help meet this requirement, lidar designs use narrow bandpass filters that transmit only wavelengths close to the laser wavelength, or they restrict the receiver's angle of view.

Figure 4. OpticStudio analyses show a coating with low transmission for wavelengths in the visible spectrum and high transmission for infrared wavelengths.
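Both techniques reduce background light for the same reason: the ambient power collected scales roughly with the filter bandwidth times the solid angle the receiver sees. The sketch below assumes sunlight has a flat spectrum across the passband and uniform radiance over the field of view; the filter bandwidths and view angles in the example are hypothetical.

```python
import math


def cone_solid_angle_sr(full_angle_deg: float) -> float:
    """Solid angle (steradians) of a circular cone with the given full apex angle."""
    half_rad = math.radians(full_angle_deg) / 2.0
    return 2.0 * math.pi * (1.0 - math.cos(half_rad))


def relative_background(filter_bw_nm: float, fov_full_deg: float) -> float:
    """Ambient light reaching the detector, up to a constant factor.

    Assumes a flat solar spectrum across the passband and uniform sky/scene
    radiance, so collected power scales with bandwidth times solid angle.
    """
    return filter_bw_nm * cone_solid_angle_sr(fov_full_deg)


if __name__ == "__main__":
    wide = relative_background(100.0, 10.0)  # hypothetical broad filter, wide view
    narrow = relative_background(10.0, 1.0)  # hypothetical narrow filter, narrow view
    print(f"narrowing both reduces ambient light by about {wide / narrow:.0f}x")
```

Because solid angle grows with the square of a small view angle, restricting the angle of view by 10x cuts the background by roughly 100x, and a 10x narrower filter contributes another 10x.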

Most solid-state lidar systems currently available use pulsed lasers to measure the time of flight, but an alternative approach uses frequency modulation (FM) of continuous-wave (CW) lasers, known as CWFM or, more commonly, FMCW lidar. The CW laser beam is split into a reference path and a path that reflects off the target. The frequency of the CW source is swept at a constant rate, so when the two beams are recombined at the detection optics, they interfere to produce a beat frequency proportional to the round-trip delay, from which the distance to the target can be calculated. This technique is showing promising results for improving range and reducing ambiguity in detected signals. With multiple lidar systems on a vehicle, it will likely be difficult to tell which pulses came from which lidar source. CWFM lidar systems reduce this ambiguity because each source can use a different modulation. Furthermore, CWFM lidar can detect object velocity with a single measurement, because the return signal carries a Doppler shift.
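A common way to separate range from velocity is a triangular chirp: the range term shifts the beat frequency the same way on the up-sweep and the down-sweep, while the Doppler term shifts them in opposite directions. The sketch below is that textbook model, not any vendor's signal chain. It assumes a target approaching at positive radial velocity, a range beat larger than the Doppler shift, and hypothetical chirp parameters (1 GHz sweep over 10 µs at 1550 nm). A real system would estimate the two beat frequencies from the detector signal, typically with an FFT.

```python
C = 299_792_458.0  # speed of light, m/s


def beat_frequencies(range_m, radial_velocity_mps, bandwidth_hz, chirp_time_s, wavelength_m):
    """Up-ramp and down-ramp beat frequencies for a triangular linear chirp.

    Sign convention (assumed): positive velocity = approaching target, which raises
    the received frequency, so Doppler subtracts on the up-ramp and adds on the down-ramp.
    """
    slope = bandwidth_hz / chirp_time_s               # chirp rate, Hz/s
    f_range = 2.0 * range_m * slope / C               # beat from round-trip delay
    f_doppler = 2.0 * radial_velocity_mps / wavelength_m
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up, f_down, bandwidth_hz, chirp_time_s, wavelength_m):
    """Invert the two beat frequencies into range (m) and radial velocity (m/s)."""
    slope = bandwidth_hz / chirp_time_s
    f_range = (f_up + f_down) / 2.0
    f_doppler = (f_down - f_up) / 2.0
    return C * f_range / (2.0 * slope), f_doppler * wavelength_m / 2.0


if __name__ == "__main__":
    params = (1e9, 10e-6, 1550e-9)  # hypothetical bandwidth, chirp time, wavelength
    f_up, f_down = beat_frequencies(150.0, 20.0, *params)
    r, v = range_and_velocity(f_up, f_down, *params)
    print(f"beats: {f_up / 1e6:.1f} / {f_down / 1e6:.1f} MHz -> {r:.1f} m, {v:.1f} m/s")
```

A single up/down chirp pair yields both range and radial velocity, which is the single-measurement velocity capability described above.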

Each of these design intricacies comes with budget challenges. Lower cost lidar systems with lower data quality may be adequate for vehicles that operate at lower speeds—and therefore don’t have to react as quickly to obstacles. But with the industry touting the promise of fully autonomous vehicles, lidar systems must be both cost-effective and accurate at high speeds.

Virtual prototyping gets lidar systems to market faster

With cutthroat competition in the lidar industry, optical design teams can't afford product development delays. While designing the intended behavior of an optical system can be straightforward, predicting its as-built behavior and balancing performance with cost requires a comprehensive virtual prototype. Designers should consider all possible sources of stray light around the lidar systems in autonomous vehicles. For example, stray sunlight reaching a lidar detector can be mistaken for a return signal, and the emission from a neighboring lidar system is another potential source of stray light.

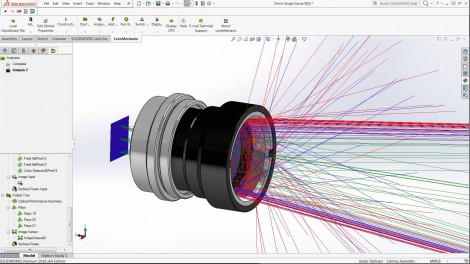

Figure 5. A LensMechanix model showing a stray light analysis of an objective lens and the mechanical housing.

Zemax Virtual Prototyping is the only solution to create a working virtual prototype of the entire lidar system, not just the optical or mechanical components alone. Engineering teams can simulate optical performance on the entire optomechanical product before creating a physical prototype. With Zemax Virtual Prototyping, you can include the effect of traffic signals turning on and off, bicycle lights, and other stray sources.

Zemax Virtual Prototyping reduces design iterations and accelerates design validation by simplifying communication between optical and mechanical engineers, maintaining design fidelity during file handoffs between OpticStudio and CAD platforms, and allowing engineers to catch and correct errors early when changes are easier and less costly. At Zemax, we’ve seen customers cut their development schedules in half using our software—beating their competitors to market.

And in the race for autonomous vehicle market share—a potential $7 trillion “passenger economy” by 2050 according to Intel—every second of the development cycle counts.

Written by Kristen Norton, OpticStudio Product Manager, Zemax
