To state that nothing has fundamentally changed in the field of motion picture technology since the end of the nineteenth century might appear to be highly controversial. However, upon closer examination, there is a great deal of truth to the statement.

The first motion pictures, for example, were created by acquiring a number of still images of a scene in rapid succession by a hand cranked camera. After the images were developed, a movie projector displayed the film by projecting successive image frames onto a screen.

Today, of course, the hand cranked camera, the analog film stock and the opto-mechanical film projector have all been replaced by digital counterparts. Nevertheless, capturing a succession of image frames using a camera and then projecting them on a screen still remains the fundamental means by which motion pictures are created and viewed.

In the contemporary machine vision industry, a similar scenario exists. Cameras equipped with high-resolution CMOS imagers also capture sequential frames of data that are then transferred across high speed networks to PCs where the images are processed by sophisticated software algorithms. The software then extracts relevant data from the images in order that the machine vision system can autonomously take action based upon the results.

Existing limitations

But according to Luca Verre, the CEO and co-founder of Chronocam, a French start up founded in 2014, capturing and analyzing images on a frame by frame basis is far from an ideal way to create a system, either for the demanding field of industrial vision or for mobile, battery-powered consumer devices. That is because conventional image sensors that acquire visual information as a series of frames recorded at a fixed rate do so without any relation to the dynamics of the scene and regardless of whether the data in the scene has changed over time. By acquiring data in such a fashion inevitably means that not only is some of the data in a scene lost, but there is also redundancy in the recorded stream of image data.

The fact that redundant data is captured in the image acquisition process means that high bandwidth networks are required to transfer that data to a PC, which must also support the means to store the large amount of data that is generated. The size of the image data created also means that hardware required for post-processing the data increases in complexity and cost. The problem, of course, gets worse as modern image sensors advance to ever higher spatial and temporal resolution.

One approach to dealing with temporal redundancy in video data is frame difference encoding. Here, only pixel values that exceed a defined intensity change threshold from frame to frame are transmitted. However, even when performed at the pixel-level, the temporal resolution of the acquisition of the scene dynamics, as in all frame-based imaging devices, is still limited to the achievable frame rate.

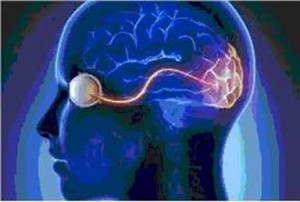

To remove those restrictions, engineers at Chronocam have developed a new type of imager that operates on an entirely different principle to a traditional imager – a principle that closely models the function of the human eye. In the eye, it is the changing levels of light intensity brought about by movements in the visual field, rather than the actual intensity of light itself, that determines whether a signal will be sent to the brain.

Modeling the eye

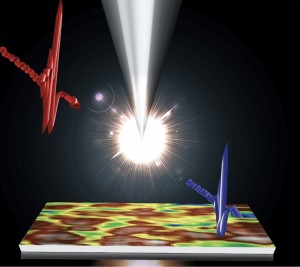

Speaking at the 2017 EMVA Business Conference held in Prague in June this year, Chronocam’s Mr. Verre explained that the data generated by the Chronocam imager is not output as a succession of frames that contain the image information from all the pixels in the imager. Instead, data is transmitted from the imager as an asynchronous stream only if an actual change in light intensity in the field of view of an individual pixel has been detected.

More specifically, after each pixel of the sensor has detected a change and made (at least one) exposure measurement, a grayscale image of the entire scene is available and stored in digital form in an image memory in the system. Subsequently, only pixels that detect changes of light intensity in their field of view update the grayscale values in the memory. As a result, a complete grayscale image of the regarded scene is present in the bit-map memory at all times and is constantly updated at high-temporal resolution and low data rate.

The image information can be taken from the bit-map memory at any time, independently of the image acquisition operation, at arbitrary repetition rates. Producing a sensor that operates in such a fashion inevitably leads to a substantial reduction of the image data that is generated due to the elimination of the temporal redundancy in the picture information that is typical for conventional clocked synchronous image sensors.

Sensor specifics

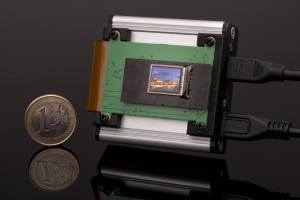

Based on the new technology concept, the company recently released a QVGA resolution (320 by 240 pixels) sensor with a pixel size of 30-microns on a side and quoted power efficiency of less than 10mW. But comparing the performance characteristics of the sensor with traditional CMOS sensors in the field is somewhat difficult, due to the unconventional design of the device itself.

The initial product to debut from Chronocam is a QVGA resolution (320 by 240 pixels) sensor with a pixel size of 30-microns on a side and quoted power efficiency of less than 10mW.

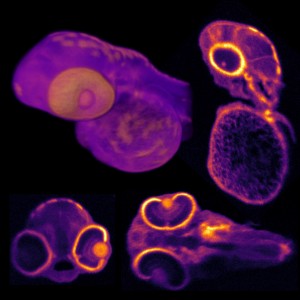

Because the sensor is able to detect the dynamics of a scene at the temporal resolution of few microseconds (approximately 10 usec) depending on the lighting conditions, the device can achieve the equivalent of 100,000 frames/sec. That frame rate would make it highly suitable for many motion capture applications.

In standard image-sensors, the exposure time and the integration capacitance are held constant for the pixel array. For any fixed integration time, the readout value has a limited signal swing limited by the power supply rails that determines the maximum achievable dynamic range which is typically on the order of 60-70dB.

By shifting performance constraints from the voltage domain into the time domain, the dynamic range is no longer limited by the power supply rails.

In Chronocam’s vision sensor, the incident light intensity is not encoded in amounts of charge, voltage, or current but in the timing of pulses or pulse edges. This scheme allows each pixel to autonomously choose its own integration time. By shifting performance constraints from the voltage domain into the time domain, the dynamic range is no longer limited by the power supply rails.

Therefore, the maximum integration time is limited by the dark current (typically seconds) and the shortest integration time by the maximum achievable photocurrent and the sense node capacitance of the device (typically microseconds). Hence, a dynamic range of 120dB can be achieved with the Chronocam technology.

Due to the fact that the imager also reduces the redundancy in the video data transmitted, it also performs the equivalent of a 100x video compression on the image data on chip. As opposed to a frame-based acquisition technology where the data rate is constant, the data rate produced by an event-based vision sensor is scene-dependent -- the more the activity in the scene, the more the data. Hence a 100x “video compression” figure is an average ratio between the data rate produced by a frame-based sensor versus a frame-based sensor.

The low power consumption of the device is achieved due to the fact that each pixel’s acquisition is triggered by a change of light in the scene of view. As long as there is not a significant change of light (with respect to a pre-defined sensitivity threshold), there is no acquisition and therefore the sensor stays in a very low power mode (while being “always-on”). Even in high dynamics scenes, however, the power consumption is significantly lower than in conventional image sensors.

Sensor funding

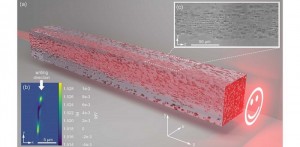

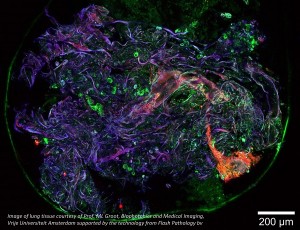

According to Chronocam’s CEO Luca Verre, avoiding the drawbacks of using traditional means to acquire image data will be beneficial for a wide range of artificial vision applications. Hence, Mr. Verre expects to see applications for the new device in industrial high-speed vision, automotive, surveillance and security or robotics as well as biomedical and scientific imaging applications.

In support of its efforts to achieve that goal, last October, the company announced it had raised $15 million in funding from Intel Capital, along with iBionext, Robert Bosch Venture Capital GmbH, 360 Capital, CEAi and Renault Group. One month later, Renault announced that it would be actively investigating how to apply Chronocam’s technology to areas such as collision avoidance, driver assistance, pedestrian protection, blind spot detection and other critical functions to improve safety and efficiency in the operation of both manned and autonomous vehicles.

Written by Dave Wilson, Senior Editor, Novus Light Technologies Today

Back to Enlightening Applications

Back to Enlightening Applications