Surveillance systems are widely deployed across the globe, in many different environments, to monitor the behavior of people. CCTV (closed-circuit television) cameras were the first security cameras to be widely adopted, but newer systems increasingly use IP-based digital cameras to take advantage of the technical benefits they offer.

An inexpensive network surveillance system might simply comprise one or more “dumb” network- or IP-based cameras that capture video image data and transfer it to a networked server where the images can be recorded or viewed live on a monitor for analysis by a human operator. In more sophisticated systems, however, the cameras themselves might perform some processing of the images prior to transmission over the network.

Image analysis

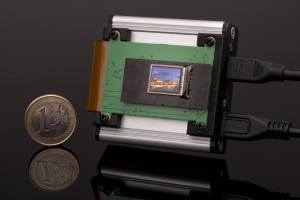

Because many modern IP cameras contain powerful processors, an increasing amount of image processing is now performed in the camera itself, with only the results of the analysis passed on to the server. Such image analysis software can perform face detection, object detection, tracking and classification, as well as license plate detection and recognition.

Where the analysis software resides in a system depends heavily on cost. While it is quite possible to run image analysis software on the camera's own CPU, in some applications it may be more cost-effective to run it on a centralized server or to use cloud-based resources supplied by third parties.

Many developers would like the option of mixing and matching different models and types of cameras and associated software to build a system that meets their specific needs. Currently, however, doing so is not straightforward, primarily because many surveillance hardware and software suppliers provide their own solutions that may not interoperate with each other.

A new standard enables interoperability

This year, however, a new standard is set to emerge that should help address these concerns, eventually enabling developers to build surveillance systems by choosing the most appropriate hardware and software for a given task, confident that they will interoperate. The effort may also lower the cost of implementing surveillance systems in the future, just as earlier hardware standards such as HDMI lowered costs by encouraging interoperability.

The new standard will be the result of work currently being undertaken by the Network of Intelligent Camera Ecosystem (NICE) Alliance, which is spearheaded by Dr. David Lee, Chairman and CEO of Silicon Valley start-up Scenera. Adding to the impetus behind the standard are endorsements from global sensor maker Sony Semiconductor and camera maker Nikon, as well as Foxconn and Wistron, original design manufacturers (ODMs) that design and manufacture camera modules and software that others then rebrand for sale.

According to Dr. Lee, the greatest appeal of software applications that run on contemporary smartphones from companies such as Apple and Google is the ease with which they interoperate. This is largely because the major vendors have created what are known as “ecosystems” to support their products.

“These so-called ecosystems are essentially a collection of software systems, such as operating systems and cloud services software, that are developed together to provide a unified environment for software application developers. Once in place, they enable the company’s hardware devices and own software products to be supplemented by applications provided by third-party developers. The effect of creating such an ecosystem means that vendors can then provide a greater wealth of applications for the user,” said Dr. Lee.

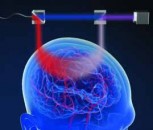

Some surveillance cameras already on the market demonstrate the advantages of the Google ecosystem. One of them, a home surveillance camera, not only captures live video but also processes those images to detect motion, while a built-in microphone detects sounds. Data from the camera is then sent wirelessly to software services in the cloud, where it can be stored and processed to analyze the captured images and sounds. Using an application running on a smartphone, a user can then be alerted through video and audio to any suspicious activity in their home.

Essentially, then, the founding members of the NICE Alliance aim to create a similar ecosystem for ODMs and other developers of surveillance hardware such as cameras. Many of these companies may not have dedicated ecosystems of their own, and the alliance would enable them to reap benefits similar to those currently available to smartphone users.

Use what you need, where you need it

Dr. Lee acknowledges that many surveillance camera manufacturers are now looking for ways to enhance their cameras by enabling them to run software that performs some processing on the video streams they capture. He also recognizes that they would like to take advantage of cloud-based software to perform video analytics. The work of the NICE Alliance should therefore result in an open software architecture that lets users deploy just the software elements they need, where they need them, on any ODM surveillance hardware platform.

The members of the NICE Alliance also plan to address the variety of hardware available on the market. Among IP-based digital cameras alone, many different types are available. Some, for example, are designed to monitor a scene in a fixed direction, while others, such as PTZ dome cameras equipped with motorized mounts and zoom lenses, allow an operator to remotely pan, tilt, and zoom the camera. More specialized designs include day/night cameras, which use switchable IR-cut filters so that they can operate in low light conditions, and thermal imaging cameras, which can capture images in dark or harsh environments.

According to Dr. Lee, cloud-based applications will need to be able to interrogate a camera to discover its hardware capabilities and so determine whether application software in the cloud can take advantage of them. He also acknowledges that a means must be provided in the cloud to equalize the technical disparities between cameras. While a camera equipped with a powerful CPU may be able to perform license plate recognition itself, for example, the data captured by a less sophisticated unit may need to be uploaded to the cloud for processing.
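To make the idea of interrogating a camera concrete, the following is a minimal sketch, assuming a camera can report which analytics it performs on board. The `CameraCapabilities` record, its field names, and the `route_analysis` function are hypothetical illustrations, not part of any published NICE specification. The sketch simply decides whether a task such as license plate recognition runs on the camera or falls back to the cloud.

```python
from dataclasses import dataclass, field


# Hypothetical capability report a camera might return when queried.
# Field names are illustrative assumptions, not part of any NICE specification.
@dataclass(frozen=True)
class CameraCapabilities:
    camera_id: str
    on_device_analytics: frozenset = field(default_factory=frozenset)
    has_ptz: bool = False
    has_thermal: bool = False


def route_analysis(caps: CameraCapabilities, task: str) -> str:
    """Decide where a requested analysis task should run.

    Returns "camera" if the camera reports it can perform the task itself,
    otherwise "cloud", meaning its video would be uploaded for processing.
    """
    return "camera" if task in caps.on_device_analytics else "cloud"


# A camera with a powerful CPU and a less sophisticated unit.
smart_cam = CameraCapabilities("cam-01", frozenset({"motion", "license_plate"}), has_ptz=True)
basic_cam = CameraCapabilities("cam-02", frozenset({"motion"}))

for cam in (smart_cam, basic_cam):
    print(cam.camera_id, route_analysis(cam, "license_plate"))
```

In this sketch the more capable camera handles license plate recognition locally, while the basic unit's data is routed to the cloud, which is the kind of equalization between cameras described above.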

Once that kind of interplay between the capabilities of the camera and the application has been well defined, it may extend the lifetime of existing cameras and reduce the obsolescence that occurs when older cameras must be replaced with newer, more sophisticated ones, Dr. Lee added. Older cameras that lack the processing power to perform advanced functions such as facial recognition, for example, could remain in service if that application were moved to the cloud.

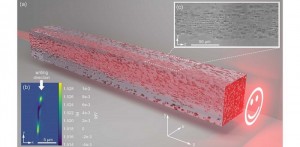

Dr. Lee noted that the consortium of industry partners will develop a layered software model to achieve its goals. The model will comprise a number of new abstraction layers that define how images are captured and processed. It should also hide the implementation details of camera hardware, enabling interoperability between cameras, the application software residing on those cameras, and cloud-based solutions.
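The general principle of an abstraction layer that hides camera hardware can be illustrated with a short sketch. Here a hypothetical `CameraDevice` interface stands between application code and two hypothetical vendor cameras; none of these class or method names come from the NICE Alliance, and the vendor classes return stand-in bytes rather than calling any real SDK. The application function is written once against the interface and works with either camera, running on board when possible and otherwise uploading a frame to the cloud.

```python
from abc import ABC, abstractmethod


# Hypothetical hardware-abstraction layer: applications call only this interface,
# so vendor-specific details stay hidden. Names are illustrative, not from NICE.
class CameraDevice(ABC):
    @abstractmethod
    def capture_frame(self) -> bytes:
        """Return one encoded frame from the sensor."""

    @abstractmethod
    def supports(self, feature: str) -> bool:
        """Report whether the device can perform a feature on board."""


class VendorACamera(CameraDevice):
    def capture_frame(self) -> bytes:
        return b"vendor-a-frame"  # stand-in for a real vendor SDK call

    def supports(self, feature: str) -> bool:
        return feature in {"face_detection"}


class VendorBCamera(CameraDevice):
    def capture_frame(self) -> bytes:
        return b"vendor-b-frame"  # stand-in for a real vendor SDK call

    def supports(self, feature: str) -> bool:
        return False  # a basic camera with no on-board analytics


# Application layer: written once against CameraDevice, works with either vendor.
def detect_faces(camera: CameraDevice) -> str:
    if camera.supports("face_detection"):
        return "ran on camera"
    frame = camera.capture_frame()
    return f"uploaded {len(frame)} bytes to cloud"


print(detect_faces(VendorACamera()))
print(detect_faces(VendorBCamera()))
```

The design choice being illustrated is that only the vendor classes know anything about the hardware; adding a third camera model would mean writing one more subclass, with no change to the application code.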

By Dave Wilson, Senior Editor, Novus Light Technologies Today
