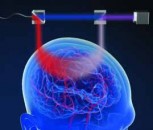

What if computer screens had glasses instead of the people staring at the monitors? That concept is not too far afield from technology being developed by University of California, Berkeley (UC Berkeley) computer and vision scientists.

The researchers are developing computer algorithms to compensate for an individual’s visual impairment, and creating vision-correcting displays that enable users to see text and images clearly without wearing eyeglasses or contact lenses. The technology could potentially help hundreds of millions of people who currently need corrective lenses to use their smartphones, tablets and computers. One common problem, for example, is presbyopia, a type of farsightedness in which the ability to focus on nearby objects gradually diminishes as the lenses of aging eyes lose elasticity.

More importantly, the displays could one day aid people with more complex visual problems, known as high-order aberrations, which cannot be corrected by eyeglasses, said Brian Barsky, UC Berkeley professor of computer science and vision science and affiliate professor of optometry.

According to Barsky, who is leading the project, displays are now ubiquitous and being able to interact with displays is taken for granted. This research could transform the lives of people with high-order aberrations. Barsky is passionate about that potential.

Using computation to correct vision

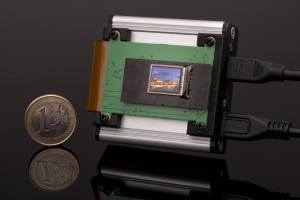

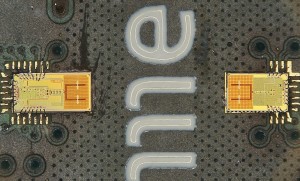

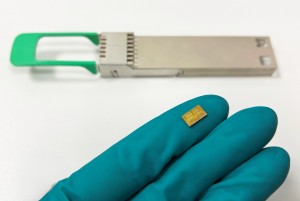

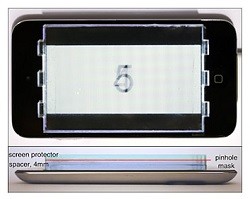

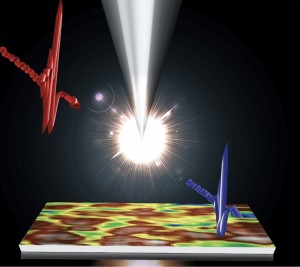

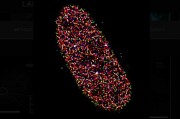

The UC Berkeley researchers teamed up with Gordon Wetzstein and Ramesh Raskar, colleagues at the Massachusetts Institute of Technology (MIT), to develop their latest prototype of a vision-correcting display. The setup adds a printed pinhole screen sandwiched between two layers of clear plastic to an iPod display to enhance image sharpness. The tiny pinholes are 75 micrometres (µm) each and spaced 390µm apart.

Researchers explain the science behind new vision-correcting display technology being developed at UC Berkeley. (Video by Fu-Chung Huang)

The research team will present this computational light field display at the 41st International Conference and Exhibition on Computer Graphics and Interactive Techniques (SIGGRAPH) on 12 August 2014 in Vancouver, Canada.

According to lead author Fu-Chung Huang, who worked on this project as part of his computer science PhD dissertation at UC Berkeley, instead of relying on optics to correct your vision, this project uses computation. It is a very different class of correction and it is non-intrusive.

The algorithm, which was developed at UC Berkeley, works by adjusting the intensity of each direction of light that emanates from a single pixel in an image, based upon a user’s specific visual impairment. The displayed image is computed through a process called deconvolution (i.e., an algorithm-based process used to reverse the effects of convolution on recorded data), so that once the light passes through the pinhole array, the user perceives a sharp image.
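The idea of deconvolution can be illustrated with a much simpler, two-dimensional sketch. This is not the researchers' light field algorithm: it assumes the viewer's blur is a plain Gaussian (a stand-in for defocus), ignores the pinhole array entirely, and uses a Wiener-style regularized inverse filter. All function names and parameter values below are hypothetical and chosen only for illustration.

```python
import numpy as np

def gaussian_otf(shape, sigma):
    """Frequency response of a Gaussian blur with std `sigma` (in pixels).
    Assumption: the viewer's defocus is modeled as a Gaussian."""
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    return np.exp(-2.0 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))

def blur(image, otf):
    """Simulate what the viewer sees: convolution done in the Fourier domain."""
    return np.real(np.fft.ifft2(np.fft.fft2(image) * otf))

def prefilter(image, otf, k=1e-3):
    """Deconvolve ahead of time with a regularized (Wiener-style) inverse.
    `k` keeps the filter from dividing by near-zero frequency responses."""
    inverse = np.conj(otf) / (np.abs(otf) ** 2 + k)
    return np.real(np.fft.ifft2(np.fft.fft2(image) * inverse))

def demo(sigma=2.0, k=1e-3):
    # Toy target: a bright square on a dark background.
    image = np.zeros((64, 64))
    image[24:40, 24:40] = 1.0
    otf = gaussian_otf(image.shape, sigma)

    seen_plain = blur(image, otf)                       # ordinary display
    seen_corrected = blur(prefilter(image, otf, k), otf)  # prefiltered display

    err_plain = np.sqrt(np.mean((seen_plain - image) ** 2))
    err_corrected = np.sqrt(np.mean((seen_corrected - image) ** 2))
    return err_plain, err_corrected

if __name__ == "__main__":
    plain, corrected = demo()
    print("RMS error without prefiltering:", plain)
    print("RMS error with prefiltering:   ", corrected)
```

In this toy setting the prefiltered image, once blurred, lands closer to the target than the unprocessed one. The prefiltered image itself typically contains values outside the range a real screen can show, which hints at why computation alone is limiting and why the prototype pairs the algorithm with display optics.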

This technique warps the image so that when the intended user looks at the screen, the image will appear sharp to that viewer, said Barsky, but if another viewer were to look at the image, it would seem strange.

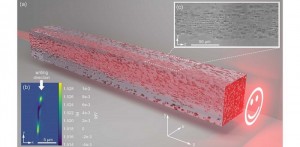

In the experiment, the researchers displayed images that appeared blurred to a camera, which was set to simulate a farsighted person. When using the new prototype display, the blurred images appeared sharp through the camera lens.

This latest approach improves upon earlier versions of vision-correcting displays that resulted in low-contrast images. The new display combines light field display optics with novel algorithms.

The blurred image on the left shows how a farsighted person would see a computer screen without corrective lenses. In the middle is how that same person would perceive the picture using a display that compensates for visual impairments. The picture on the right is a computer simulation of the best picture quality possible using the new prototype display. The images were taken by a DSLR camera set to simulate hyperopic vision. (Photo by Houang Stephane/flickr; modified by Fu-Chung Huang/UC Berkeley)

Huang, now a software engineer at Microsoft Corp. in Seattle, Washington (US), noted that the research prototype could easily be developed into a thin screen protector and that continued improvements in eye-tracking technology would make it easier for the displays to adapt to the position of the user’s head.

The future hope is to expand this application to multi-way correction on a shared display, so users with different visual problems can view the same screen and see a sharp image, said Huang. The National Science Foundation helped support this work.

Related information:

• Eyeglasses-free Display: Towards Correcting Visual Aberrations with Computational Light Field Displays (Paper in ACM Transactions on Graphics)

Top of page: Researchers placed a printed pinhole array mask on top of an iPod touch as part of their prototype display. Shown above are top-down and side-view images of the setup. (Photo courtesy of Fu-Chung Huang)
