The autonomous vehicle market is on an upward trajectory. According to the Research and Markets report Autonomous Vehicle Market, global revenue is expected to grow at a compound annual growth rate (CAGR) of nearly 40% through 2027, reaching $126.8 billion. With growth like this anticipated over the next nine years, it’s no wonder that leading automotive manufacturers and technology firms want to be part of this market. The supply chain serving it will have to be robust, and it will be demanding, with quality control held to the strictest standards. The opportunities are boundless for the photonics industry, whose technologies, such as vision systems and lidar sensing, bring the autonomy to autonomous driving.
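To show what "nearly 40%" compounding means, the short sketch below works the standard CAGR formula, CAGR = (end / start)^(1 / years) - 1, backward. It assumes a flat 40% rate and nine years of growth ending at the report's $126.8 billion. Under those assumptions the implied starting market is only about $6 billion. That figure is a back-of-the-envelope inference, not a number taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def implied_start_value(end_value: float, rate: float, years: int) -> float:
    """Invert the compounding: the value `years` earlier that grows to end_value."""
    return end_value / (1 + rate) ** years


# Assumed inputs: the report's $126.8B for 2027, with "nearly 40%" rounded
# to a flat 40% and nine years of growth. The result is illustrative only.
END_2027_BILLIONS = 126.8
start = implied_start_value(END_2027_BILLIONS, 0.40, 9)
print(f"implied starting size: ${start:.1f}B")            # prints: implied starting size: $6.1B
print(f"check: {cagr(start, END_2027_BILLIONS, 9):.0%}")  # prints: check: 40%
```

Because 1.4 raised to the ninth power is roughly 20.7, a 40% CAGR sustained for nine years multiplies the market about twentyfold.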

Vision systems watch the road

In August of this year, Intel Corporation acquired Mobileye, a company that specializes in computer vision, machine learning, data analysis, localization and mapping for driver assistance systems and autonomous driving. Mobileye uses a proprietary artificial vision sensor to view the road and identify objects on it, such as vehicles and pedestrians, as well as lane markings and speed limit signs.

The system gathers information and measures the vehicle’s speed, its distance and relative speed with respect to other vehicles and objects on the road, and its position relative to lane markings. It then determines whether there is a potential danger and, if so, warns the driver with visual and audible alerts.
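The article does not describe Mobileye’s decision logic, which is proprietary. A common textbook way to turn distance and relative speed into a warning is time to collision (TTC), which is the distance divided by the closing speed. The sketch below assumes a hypothetical `Track` record and an illustrative 2.5-second warning threshold. Neither comes from Mobileye.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """One object ahead of the vehicle, as a perception system might report it.
    Hypothetical structure for illustration, not Mobileye's data format."""
    label: str
    distance_m: float          # range to the object, in metres
    relative_speed_mps: float  # object speed minus own speed; negative = closing


def time_to_collision(track: Track) -> float:
    """Seconds until contact if both speeds stay constant; inf if not closing."""
    if track.relative_speed_mps >= 0:
        return float("inf")
    return track.distance_m / -track.relative_speed_mps


def collision_warnings(tracks: list[Track], threshold_s: float = 2.5) -> list[str]:
    """Labels of objects whose TTC is below threshold_s (an assumed value)."""
    return [t.label for t in tracks if time_to_collision(t) < threshold_s]


tracks = [
    Track("car", distance_m=30.0, relative_speed_mps=-15.0),        # TTC 2.0 s
    Track("pedestrian", distance_m=40.0, relative_speed_mps=-5.0),  # TTC 8.0 s
    Track("truck", distance_m=20.0, relative_speed_mps=2.0),        # pulling away
]
print(collision_warnings(tracks))  # prints: ['car']
```

A production system would also account for acceleration, object type and driver reaction time. Constant-speed TTC is only the simplest version of the idea.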

Note the vast amount of information the system can provide for the vehicle to understand and negotiate the driving scene: free space (the green carpet), vehicle and pedestrian detection, traffic sign recognition and lane markings.

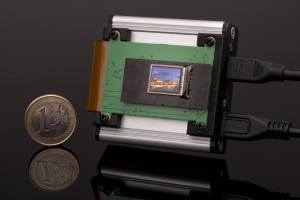

At the heart of Mobileye’s vision system is the EyeQ chip. This system on chip (SoC) has the bandwidth to process streams from the surround cameras, radar and lidar systems used in advanced driver assistance systems (ADAS), and the platform is progressing toward fully autonomous driving. Mobileye’s technology will be implemented by Intel’s Automated Driving Group, which is developing cloud-to-car solutions for the automotive market. BMW is one automaker working with Intel on an autonomous vehicle, which is intended to go into serial production in 2021.

Mobileye isn’t the only company with autonomous driving solutions. AdaSky has been developing thermal cameras for civil and military use for years, and it is now introducing the Viper, a far-infrared (FIR) sensor with built-in machine learning software.

Because it uses shutterless technology, the Viper has no moving parts. It can detect temperature differences as small as 50 mK, which lets it pick out living creatures, cars and other man-made objects. It doesn’t illuminate the scene or interfere with other systems or the environment, and it isn’t blinded by oncoming headlights or direct sunlight.

Hella Aglaia and NXP recently announced that they are expanding their ADAS vision platform with artificial intelligence capability. Many of today’s vision platforms are closed and proprietary, but this one is open. As a result, system integrators can innovate and combine the best sensor technology and software from multiple sources.

The next-generation processor, available in 2018, will enable driving functions such as pixelwise classification, semantic path finding and vehicle localization.

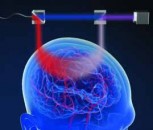

Lidar sensing

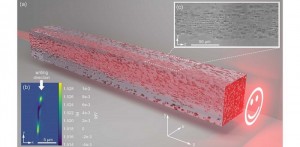

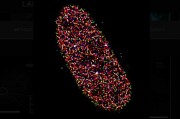

Lidar stands for light detection and ranging, and it has long been used for atmospheric mapping and meteorology. In autonomous technologies, it is used to detect stationary objects, image them in three dimensions and measure their distance. The trend in lidar today is solid-state lidar, which has no moving parts and is therefore more reliable for automotive use.
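The article does not say how lidar measures distance. One common approach, used by pulsed lidar, is direct time of flight: the sensor fires a short laser pulse and times the echo. Because the light travels to the target and back, the range is d = c * t / 2. The sketch below applies that relation with illustrative timings.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0  # metres per second (exact by definition)


def range_from_round_trip(round_trip_s: float) -> float:
    """Target distance from a pulse's round-trip time of flight.
    The light travels out and back, so the total path is halved."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Inverse: how long the receiver waits for an echo from distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_MPS


print(f"{range_from_round_trip(1e-6):.1f} m")         # prints: 149.9 m
print(f"{round_trip_for_range(200.0) * 1e9:.0f} ns")  # prints: 1334 ns
```

The same arithmetic shows why timing precision matters: an error of 1 ns in the measured round trip corresponds to about 15 cm of range.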

Waymo, formerly the Google self-driving car project, has its own in-house lidar team that is developing three types of lidar: short-range, long-range and high-resolution. Short-range lidar is positioned to give the car a surround view low down and all around the vehicle. Long-range lidar helps the car understand objects and signals far away, such as hand gestures.

High-resolution lidar sends out millions of laser pulses every second to build a detailed 3D picture that helps the car navigate city streets filled with cars, pedestrians, bicyclists and more. The three types work together as an integrated system. They complement one another and add a new level of imaging that bolsters the information provided by cameras and radar.
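Each laser return gives a range and the two angles the beam was pointing at. Turning millions of these returns into a 3D picture is, at its core, a spherical-to-Cartesian conversion. The sketch below uses a hypothetical `LidarReturn` format and an assumed axis convention (x forward, y left, z up). It is not Waymo’s data format or processing pipeline.

```python
import math
from typing import List, NamedTuple, Tuple


class LidarReturn(NamedTuple):
    """One echo in sensor coordinates (hypothetical format for illustration)."""
    range_m: float
    azimuth_deg: float    # horizontal angle; 0 = straight ahead, positive = left
    elevation_deg: float  # vertical angle; 0 = level, positive = up


def to_cartesian(ret: LidarReturn) -> Tuple[float, float, float]:
    """Spherical to Cartesian with x forward, y left, z up (metres)."""
    az = math.radians(ret.azimuth_deg)
    el = math.radians(ret.elevation_deg)
    ground = ret.range_m * math.cos(el)  # projection onto the horizontal plane
    return (ground * math.cos(az), ground * math.sin(az), ret.range_m * math.sin(el))


def build_point_cloud(returns: List[LidarReturn]) -> List[Tuple[float, float, float]]:
    """Convert a sweep of returns into a list of 3D points."""
    return [to_cartesian(r) for r in returns]


cloud = build_point_cloud([
    LidarReturn(10.0, 0.0, 0.0),   # 10 m dead ahead
    LidarReturn(10.0, 90.0, 0.0),  # 10 m to the left
    LidarReturn(10.0, 0.0, 30.0),  # ahead, 30 degrees up
])
```

At millions of points per second, a real system would vectorize this (for example with NumPy arrays) or run it on dedicated hardware rather than loop over points in Python.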

Sensata and Quanergy recently announced the S3, a solid-state lidar sensor. The S3 uses the optical phased array (OPA) principle to scan its field of view.

The sensor has no moving parts. Using dynamic laser beam positioning, it can scan only the areas of interest on demand instead of sweeping sequentially through the entire field of view.
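An optical phased array steers light the way a phased-array radar steers radio waves. Light from a laser is typically split across many small emitters, and a linear phase ramp across them tilts the combined wavefront. For a uniform linear array, adjacent emitters differ in phase by delta = 2 * pi * d * sin(theta) / lambda. The sketch below computes that ramp. The 905 nm wavelength, half-wavelength pitch, eight emitters and region-of-interest angles are all illustrative assumptions, not S3 specifications.

```python
import math
from typing import List


def element_phases(num_elements: int, pitch_m: float,
                   wavelength_m: float, steer_deg: float) -> List[float]:
    """Phase (radians, wrapped to [0, 2*pi)) for each emitter of a uniform linear
    optical phased array so the combined beam points steer_deg off boresight.

    Adjacent emitters differ by delta = 2*pi*pitch*sin(theta)/wavelength, which
    lines up their wavefronts along theta. (The ramp's sign, i.e. which side of
    boresight the beam swings to, depends on the convention used.)"""
    delta = 2 * math.pi * pitch_m * math.sin(math.radians(steer_deg)) / wavelength_m
    return [(n * delta) % (2 * math.pi) for n in range(num_elements)]


# Assumed, illustrative parameters: 905 nm light and half-wavelength emitter
# pitch, a spacing that avoids grating lobes (unwanted extra beams).
WAVELENGTH_M = 905e-9
PITCH_M = WAVELENGTH_M / 2

# Steering is electronic, so angles can be visited in any order. Here the
# plan covers three hypothetical regions of interest instead of a full sweep.
regions_of_interest_deg = [-12.0, 3.5, 20.0]
phase_plan = {angle: element_phases(8, PITCH_M, WAVELENGTH_M, angle)
              for angle in regions_of_interest_deg}
```

Because each pointing direction is just a new set of phases, jumping between distant angles costs no more than stepping to the adjacent one. That is what makes on-demand scanning of selected regions possible.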

Opportunities abound

Laser Components has been in the photonics industry for 35 years, and the first of its products has recently been qualified for use in the automotive industry. Its newly released 905 nm pulsed laser diodes are key components of the lidar systems used in autonomous driving. The company is just one of many long-established optics, component and other manufacturers developing technologies to serve the burgeoning autonomous vehicle market.

Written by Anne Fischer, Managing Editor, Novus Light Technologies Today
